The series dedicated to the new NEx64T architecture has come to an end, after comparing it with the best-known and most important architectures. Below is a list of the previous articles, to make them easier to find:

NEx64T – 2: opcodes structure for simple decoding

NEx64T – 3: length of instructions and instructions executed

NEx64T – 4: a look at the new architecture

NEx64T – 5: addressing modes and immediate values

NEx64T – 6: the legacy of x86/x64

NEx64T – 7: the new SIMD/vector unit

NEx64T – 8: comparison with other architectures

From the list, one can also guess how the new ISA addresses the most important points in this area. Special emphasis must be placed on the legacy inherited from x86 and x64, which has been appropriately scaled down through particular design choices, so as to minimise its impact in all the most important areas (silicon / number of transistors, power consumption, performance).

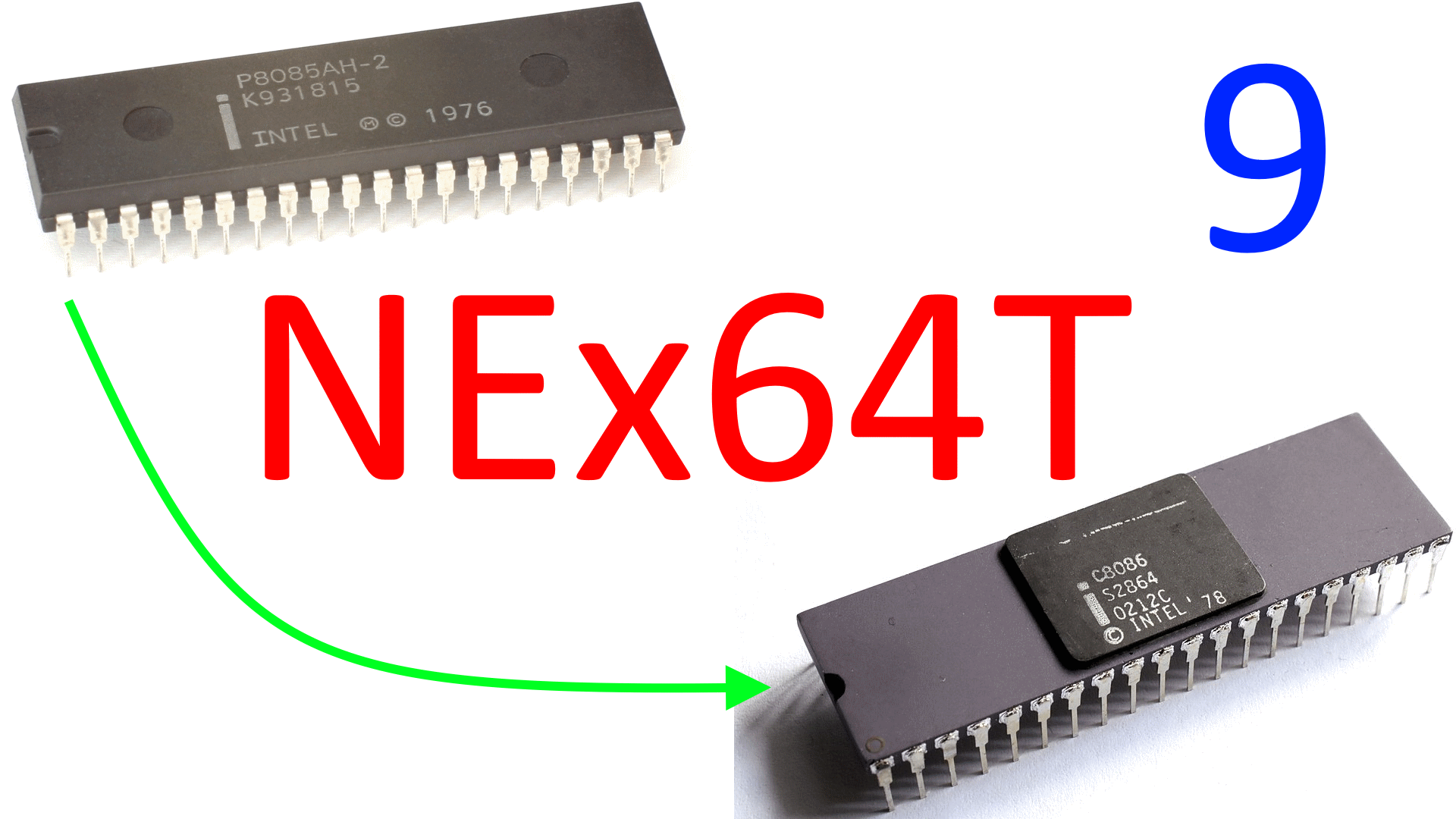

In fact, there is no doubt that it aims to replace the latter (this is clearly the main objective for which it was born), getting rid of a lot of obsolete baggage weighing down the processor cores while bringing in a considerable number of innovations, in order to be much more competitive than x86 and x64 are now, given that the competition is becoming increasingly fierce.

From this point of view, it must be emphasised that, in addition to the enormous software library available for them, the strong point of these two architectures has been their high performance, thanks to the investments made mainly by Intel and AMD to bring them level with the more celebrated L/S (ex-RISC) architectures, starting in particular with the 80486 (one of the two “anti-RISCs”; the other was Motorola’s spectacular 68040).

This is hardly surprising, given the hunger for performance that technological progress has demanded in order to satisfy the ever-increasing needs of society as a whole, leading us to the levels of comfort and well-being that we now enjoy (and which, having “tasted” them, it is hard to think of giving up).

Meeting these requirements has essentially come down to two fundamental points: ever-higher clock frequencies and the number of instructions executed per clock cycle (IPC), which, very (very!) roughly speaking, have been used as a “performance index” (with the infamous metric represented by MIPS).
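To make the “very roughly speaking” concrete, the relationship between these quantities can be sketched as follows. This is only a minimal illustration and is not taken from the series: the function names and all the figures are hypothetical, chosen to show that a higher MIPS value does not necessarily mean a shorter execution time when two ISAs need a different number of instructions for the same task.

```python
def mips(clock_hz: float, ipc: float) -> float:
    """Millions of instructions per second: clock frequency times IPC."""
    return clock_hz * ipc / 1e6


def exec_time_s(instr_count: float, clock_hz: float, ipc: float) -> float:
    """Time needed to run a task made of `instr_count` instructions."""
    return instr_count / (clock_hz * ipc)


# Hypothetical cores running the same task at the same 1 GHz clock.
# Core A sustains a higher IPC but needs more instructions for the task.
core_a = {"clock_hz": 1e9, "ipc": 1.0, "instr_count": 600e6}
core_b = {"clock_hz": 1e9, "ipc": 0.5, "instr_count": 100e6}

for name, core in (("A", core_a), ("B", core_b)):
    rate = mips(core["clock_hz"], core["ipc"])
    time_s = exec_time_s(core["instr_count"], core["clock_hz"], core["ipc"])
    print(f"core {name}: {rate:.0f} MIPS, {time_s:.2f} s")
```

With these made-up figures, core A reports twice the MIPS of core B and yet takes three times as long to finish, which is why MIPS alone says little when the instruction counts differ.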

In recent times, these factors have been joined by chip power consumption, since it is no longer possible to scale performance indefinitely while completely ignoring how much current needs to be supplied to power these increasingly voracious devices.

The Holy Grail of single-core performance…

While ever-higher frequencies were guaranteed by the adoption of ever more advanced production processes, and power consumption was not a problem at that time (also, and above all, thanks to those same production processes), the same could not be said at all about IPC.

All the more so in view of the fact that, at the time of the “home” computing boom (late 1970s – early 1980s), microprocessors were not used to executing multiple instructions per clock cycle, but, on the contrary, instructions required multiple clock cycles to be executed.

The original RISC concept contributed substantially to the introduction of the IPC concept, since executing instructions in a single clock cycle is one of the four pillars on which this macro-family of architectures is based (as already extensively discussed in a recent series of articles). IPC obviously became relevant once these processors switched to executing more than one instruction per clock cycle.

This made the evaluation of processor performance even more difficult. The aforementioned MIPS progressively lost all meaning as a metric and gave way to several benchmarks created to take its place, among which SPEC (which has become the de facto industry standard) deserves a special mention.

In any case, and whatever the reference metric, the goal of CPU manufacturers (at least of the mainstream / more “general” ones) has always been to seek better performance, which until the advent of multithread & multicore systems concerned, in fact, a single core and a single hardware thread (speaking of SMT), and for this reason we refer, in this case, to single-core/thread performance.

This is an area in which, as already mentioned, Intel’s and AMD’s processors prevailed over the more renowned competition, once the engineers of these companies found a way to implement pipelining and superpipelining effectively, first with the aforementioned 80486 and later with the Pentium and, above all, the Pentium Pro.

On the other hand, it was quite obvious that it would come to this since, as already discussed several times in previous articles, CISC processors are, in general, able to perform more “useful work” than L/S architectures, requiring on average fewer instructions to implement and complete a given task.

This consequently means fewer instructions to be loaded & decoded in the frontend, reducing its complexity (from this point of view). One only has to compare the microarchitecture diagrams of the processors to realise this immediately: it is glaringly obvious!

NEx64T, as a redesign & extension of x86/x64, firmly continues the tradition of performing much more “useful work” per instruction, positioning itself even better than the competition (including the two architectures it aims to replace).

In this regard, the previous articles providing a general overview of the instructions and of the SIMD/vector unit have shown the enormous potential of this new architecture, and already give an idea of the amount of “useful work” that can be performed by individual instructions.

It is therefore evident how much better it can do in terms of performance, even with a smaller frontend and backend, with the clear aim of achieving leadership.

…even with in-order architectures!

This is even more true if we take into account in-order microarchitectures, which do not execute instructions using the speculative techniques typical of out-of-order microarchitectures. Instead, instructions are processed in a strictly sequential and ordered manner, even if more than one of them can be processed per clock cycle.

The demonstration of this is quite trivial, taking an instruction like this one:

ADD.L [R0 + R1 * 4 + 0x1245678], 0x9ABCDEF0 ; .L = 32-bit integer

which is able to operate directly on memory and which, in a CISC architecture such as x86/x64 or NEx64T, could even be executed in a single clock cycle (depending on the microarchitecture and, of course, provided that the data to which the immediate value is added is already in the cache).

The equivalent for an L/S ISA is a mini-subroutine (the example is purely theoretical/representative and does not refer to any architecture in particular), assuming that the registers can be freely reused (R0 in particular, which is overwritten):

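LOAD R2, [GLOBALS_REG + OFFSET_0x1245678] ; R2 = displacement 0x1245678, loaded from the globals area

ADD R0, R0, R2 ; R0 = R0 + displacement (R0 is overwritten)

LOAD R2, [R0 + R1 * 4] ; R2 = 32-bit value at the effective address

LOAD R3, [GLOBALS_REG + IMMEDIATE_0x9ABCDEF0] ; R3 = immediate 0x9ABCDEF0, loaded from the globals area

ADD R2, R2, R3 ; R2 = value + immediate

STORE R2, [R0 + R1 * 4] ; write the result back to memory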

Obviously, these considerations also apply to out-of-order processors, where L/S processors can mitigate the performance impact of having to execute as many as six instructions (instead of the single one of CISCs) by executing some of them in parallel. The point, however, is that CISCs manage to do better anyway, and without any particular effort, because it is in their intrinsic nature. It is precisely with in-order architectures that the situation becomes dramatic, to say the least, for L/S processors, and that the advantages of CISCs are most pronounced.
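The effect on an in-order core can be illustrated with a deliberately simplistic toy model. It is a sketch under strong assumptions that are not part of the article: every instruction has the same latency (one cycle by default), memory always responds immediately, an instruction can issue only when all its source registers are ready, and nothing can overtake an earlier instruction. The names `Instr` and `in_order_cycles` are invented for this example, and the single CISC instruction is modelled as taking one cycle, which is the best case mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    text: str
    dst: tuple  # registers written
    src: tuple  # registers read


def in_order_cycles(program, width, latency=1):
    """Cycles taken by a toy in-order core issuing up to `width` instructions per cycle."""
    ready = {}              # register -> first cycle at which its value is available
    cycle = 0               # cycle in which the next instruction may issue
    issued_this_cycle = 0
    finish = 0
    for ins in program:
        operands_ready = max((ready.get(r, 0) for r in ins.src), default=0)
        if operands_ready > cycle:
            # Stall: wait for the operands; later instructions wait as well.
            cycle = operands_ready
            issued_this_cycle = 0
        elif issued_this_cycle == width:
            # All issue slots of this cycle are taken: move to the next cycle.
            cycle += 1
            issued_this_cycle = 0
        issued_this_cycle += 1
        done = cycle + latency
        for r in ins.dst:
            ready[r] = done
        finish = max(finish, done)
    return finish


# The six-instruction L/S sequence from the text.
LS_PROGRAM = [
    Instr("LOAD R2, [GLOBALS_REG + OFFSET_0x1245678]", ("R2",), ("GLOBALS_REG",)),
    Instr("ADD R0, R0, R2", ("R0",), ("R0", "R2")),
    Instr("LOAD R2, [R0 + R1 * 4]", ("R2",), ("R0", "R1")),
    Instr("LOAD R3, [GLOBALS_REG + IMMEDIATE_0x9ABCDEF0]", ("R3",), ("GLOBALS_REG",)),
    Instr("ADD R2, R2, R3", ("R2",), ("R2", "R3")),
    Instr("STORE R2, [R0 + R1 * 4]", (), ("R2", "R0", "R1")),
]

# The single CISC instruction (assumed to complete in one cycle).
CISC_PROGRAM = [
    Instr("ADD.L [R0 + R1 * 4 + 0x1245678], 0x9ABCDEF0", (), ("R0", "R1")),
]

for width in (1, 2):
    print(f"{width}-way in-order: L/S = {in_order_cycles(LS_PROGRAM, width)} cycles, "
          f"CISC = {in_order_cycles(CISC_PROGRAM, width)} cycle")
```

Under these assumptions the L/S sequence takes 6 cycles on a 1-way core and still 5 cycles on a 2-way core, because almost every instruction depends on the previous one, while the CISC instruction takes 1 cycle. Real latencies differ, but the dependency chain is what an in-order core cannot hide.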

This can be seen in the study that was reported in the previous article, where Intel’s 2-way in-order Atom tore through its (at the time) 2-way ARM equivalents (even out-of-order!) and competed with the 3-way out-of-order one. So what we are talking about here are not hypothetical scenarios, but very concrete ones.

Security: side-channel attacks

It may seem anachronistic to dwell on the performance of in-order processors when mainstream systems have been based on out-of-order microarchitectures for several decades, but there are a couple of not insignificant considerations to be made.

The first is that a very large proportion of devices with an integrated processor make use of in-order microarchitectures, because for them higher absolute performance matters less than low power consumption and/or small cores (which require less silicon and are therefore much cheaper to produce).

The second is that the recent discovery of side-channel vulnerabilities has uncovered the Pandora’s box represented by the many and varied speculative execution techniques employed by out-of-order processors to achieve the best possible performance.

Let it be clear that processors affected by such vulnerabilities would not be faulty at all, as long as they implement the ISA correctly. The processors do, in fact, work properly. The problem lies in the particular implementation that also allows information to be obtained that otherwise would not be accessible: a vulnerability, therefore, but not a design bug.

That being said, in-order microarchitectures are very important from this point of view, because they apparently do not suffer from these attacks (at least, as far as I can recall at the time of writing, none have been found, but there is no proof that they are completely free of them).

This means that such processors could be preferred over out-of-order ones if security played a more important role than performance. Hence it is obvious, to say the least, that CISC processors are in a clearly more advantageous position than L/S processors, as we have seen.

It is even better with NEx64T, which, as we have already seen, allows as many as two operands to be referenced directly in memory, making it possible to reduce even further the number of instructions required and thus excel with in-order microarchitectures.

One source of side-channel problems is SMT implementations, due to the sharing of part of the resources in a core. Rumours have recently circulated that Intel is in the process of abandoning this technology in future processors.

The reason for this is not to get rid of any possible vulnerability that might arise from this technology, but to concentrate on the new low-power cores (called E-Core in the company’s nomenclature).

Whatever the reason for the discontinuation of Hyper-Threading, it must be said that NEx64T proposes a new and more “creative” way of using this technology to improve single-core performance. For obvious reasons, no details have been provided (although the presence of a “secret sauce” had already been hinted at in one of the previous articles), but this innovative feature would also be among the points in favour of this new architecture.

The end of Moore’s Law

Other reasons in favour of adopting CISC architectures are “collateral” and related to what has been discussed so far. They follow as a direct consequence once we add another extremely important element when talking about processors: production processes.

We know how absolutely fundamental they have been in the evolution of processors and what benefits they have brought to the development of our civilisation, but the race for ever-better processes has slowed down considerably in recent years, due to issues that arise when using transistors of ever smaller size (and with quantum effects starting to become more relevant).

On the other hand, and whatever Intel, which has based its success on it, says, the approaching limits of matter will inevitably lead to the end of the so-called Moore’s Law, at least as it was formulated (i.e. linked to the progressive reduction in transistor size).

Other ways are being sought (photonics, use of alternative materials to silicon) to go further and continue the progress of chips, but even in this case it will still be a matter of pushing back the date by a few more years, because one cannot indefinitely bend matter to the needs of industry. “It is inescapable…”

When this happens, improving single-core/thread performance will become more problematic, and the architectures that are naturally advantaged in this respect will be the ones it makes sense to focus on, for the reasons discussed so far.

It is important to emphasise that the single-core/thread metric remains absolutely in the foreground when it comes to processor performance, despite the fact that for several years now, the phenomenon of multicore processors has exploded, with some even integrating hundreds of them.

The reason is quite simple: not all algorithms are “parallelisable”. Far from it! It is certainly true that a lot has already been done (and continues to be done) to try to exploit as many cores as possible and in the best possible way, but this is only possible when an algorithm allows it, otherwise even the presence of thousands of cores becomes completely irrelevant.
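A common way to quantify this limit is Amdahl’s law, which the article does not name but which expresses the same idea: the achievable speedup is bounded by the fraction of the work that must remain serial. The sketch below is only illustrative; the function name and the parallel fractions used in the loop are assumptions chosen for the example.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup predicted by Amdahl's law for a given parallelisable fraction."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workloads: 50%, 90% and 99% of the work can run in parallel.
for fraction in (0.5, 0.9, 0.99):
    for cores in (8, 1000):
        speedup = amdahl_speedup(fraction, cores)
        print(f"parallel fraction {fraction:.2f}, {cores:4d} cores: {speedup:.2f}x")
```

If half of the work is serial, for example, even a thousand cores cannot double the speed: only a faster single core shortens the serial part.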

That is why having processors capable of excelling in single-core/thread performance is and will remain very important. After all, if this were not the case, GPUs, which now have tens of thousands of cores inside them, would have taken the place of classical CPUs long ago.

The return of “low-level” programming

For reasons related to the above, a return to programming in assembly language, or with the equivalent intrinsics, is to be expected. Certainly not for entire applications, because that would be insane (unless you do it for pure enjoyment, but that is a different kettle of fish). Getting your hands on the critical parts to optimise them better (perhaps even with the help of compilers, looking at the code they have generated) when nothing else can be done because technological progress has come to a standstill is, however, a far from dystopian scenario.

One must be more royalist than the king, in short: what else could be done if processors can no longer (consistently) improve single-core/thread performance? It is clear that we will have to review the code already written, identify its critical points, and try to optimise them as much as possible (at an algorithmic level first, and then gradually going down to a lower level).

From this point of view, the most attractive and pleasant architectures to program are undoubtedly the CISC ones, as they require the writing of fewer lines of code/instructions to implement certain algorithms.

This task is also made easier due to the presence of more complex memory addressing modes, as well as the fact that most instructions are also able to directly access memory (another simplification for developers).

Programming in assembly with CISC processors has always been simpler and more “digestible”, and ISAs such as those of the Motorola 68000 family have taken the concept to its highest expression, to the delight of thousands of enthusiasts who still enjoy writing entire applications or games in this language “like in the old days”.

NEx64T follows in the wake of this tradition, offering an architecture that is much simpler and more convenient to program even in assembly, thanks also to a strong inspiration from the aforementioned 68000 (of which I am still a great admirer).

Plans to bring NEx64T to market

Having ascertained that this new architecture has all the credentials to play a major role, it is nevertheless unthinkable that it could be introduced overnight into well-established ecosystems where, as discussed in other articles, a change of this magnitude is difficult to make, especially in the short term.

There are, however, areas where NEx64T lends itself well, thanks to the fact that it has been designed to be 100% compatible at assembly code level with x86 and x64. Once assemblers, compilers, system libraries (libc above all) and debuggers have been implemented, in fact, it is relatively simple to obtain executables for this new ISA, since in almost all cases a trivial recompilation will suffice.

This means that it could be quickly adopted for Sony’s and Microsoft’s next-generation consoles, since binary compatibility with x64 (which is the ISA they use now) is not indispensable for the new consoles.

Furthermore, making emulators to run games from previous consoles is far simpler than for other architectures, as x64’s registers and instructions map directly to the NEx64T equivalents and work in exactly the same way. This should bring performance in line with the original architectures, while also keeping power consumption at bay.

Similarly, it could also be used in servers, embedded or IoT devices, where binary compatibility with x86/x64 is not necessary and, therefore, no emulators need to be built either.

Replacing current server and desktop systems that are strictly dependent on x86/x64 is the longer-term goal because it requires more time, as the software library is huge and there are cases that make explicit use of and reference to the opcodes of these architectures.

A classic example is applications or games that make use of JIT compilers that generate x86 or x64 binaries on the fly. In this case, it is essential to change the JITer so that it generates the equivalents for NEx64T.
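What changing the JITer means in practice can be sketched with a tiny code generator whose only ISA-specific part is the emitter. The class and function names below are hypothetical. The x64 bytes are the real encodings of `mov eax, imm32` (opcode B8) and `ret` (opcode C3), whereas the NEx64T emitter is left as an explicit placeholder, because the actual NEx64T opcodes are not given in this article.

```python
import struct


class X64Emitter:
    """Emits real x64 machine code for two very simple operations."""

    def load_result_imm32(self, value: int) -> bytes:
        # mov eax, imm32: opcode B8 followed by the little-endian immediate.
        return b"\xB8" + struct.pack("<I", value & 0xFFFFFFFF)

    def ret(self) -> bytes:
        # ret: opcode C3.
        return b"\xC3"


class NEx64TEmitter:
    """Placeholder backend: the NEx64T encodings are not known here (assumption)."""

    def load_result_imm32(self, value: int) -> bytes:
        raise NotImplementedError("NEx64T encoding not available in this sketch")

    def ret(self) -> bytes:
        raise NotImplementedError("NEx64T encoding not available in this sketch")


def jit_return_constant(emitter, value: int) -> bytes:
    """ISA-independent part of the JIT: builds a function that returns `value`."""
    return emitter.load_result_imm32(value) + emitter.ret()


code = jit_return_constant(X64Emitter(), 42)
print(code.hex(" "))
```

The ISA-independent part (`jit_return_constant`) stays untouched: porting the JIT amounts to supplying a second emitter that produces the equivalent NEx64T instructions, which is exactly the investment described here.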

Fortunately, these are not very common scenarios (given the totality of the available software pool), but they are still very important and used, so investment is needed to port them to the new ISA.

IP/Licensing and patents

As a counterbalance to the necessary investments, however, there are undoubted benefits resulting from the fact that NEx64T is a completely new IP, so that no licence fees need to be paid to Intel and/or AMD in order to implement and use it.

The fact that it is x86/x64 compatible at assembly level does not in fact mean that it uses exactly the same opcodes or IPs as these architectures. From this point of view, the ISA is completely different, with opcodes that have nothing to do with those of the other two architectures.

The only common element is the content of the registers (flag, control, debug, system), which must match (otherwise the recompiled code would not work), but this is not dissimilar to what happens with x86/x64 emulators.

Having a completely different opcode structure also means being in the opposite situation, i.e. having a new IP that has its own market value and can therefore be licensed to anyone interested.

Another non-negligible factor is the possibility of applying for patents on certain particularly creative and innovative solutions in the architecture (e.g. for some “compact” instructions), which would at the same time make it possible to “shield” them against use by third parties. This too is a considerable added value for anyone wishing to invest in the project.

Conclusions

With this last thought, the gauntlet is thrown down mainly to Intel and AMD, which are the main companies that could and should be interested in replacing their ISAs with this new one, as their products have accumulated too much legacy that weighs down their implementation, no longer allowing them to be as competitive as in the past.

This is also due to the fierce competition, which has been churning out noteworthy products in recent years (just think of Apple with its Mx processors, with which it has basically shown Intel the door, completing the process of “in-house” production of all the components of its devices).

It must be remembered, however, that NEx64T was initially born as an answer to the question of whether it was possible to make a 64-bit extension of x86 that was much more efficient than the x64 extension that AMD came up with. The answer, I think, is obvious.

The other challenge was to demonstrate to the unabashed supporters of RISC processors (which are no longer RISC processors, but exclusively L/S processors, as has been amply demonstrated in another series of articles) that yes: CISC processors are still being designed today, which have much more potential and are far more interesting, and all the more so in view of the future.

It must be pointed out, however, that NEx64T is an architecture that was not born completely free, but subject to the constraint of being fully compatible, at assembly source level, with x86 and x64, including a part of their legacy (as explained in the appropriate article). Many choices regarding the architecture and the opcode structure derive, in fact, from such constraints.

Just to be clear, with RISC-V, designers had a completely free hand to decide every single aspect of this architecture, also exploiting the opcode space as they saw fit.

This was not possible with NEx64T, otherwise different (even radically different) choices would have been made for certain aspects. Without such “chains”, in short, one could have done better, creating a simpler but also more efficient and better-performing CISC, at the cost, however, of losing the advantage of being able to recompile any x86/x64 source.

This, however, is another story, and that of NEx64T ends here.