The previous article, on the size of the data the chipset can read from or write to memory, concluded the set of changes that, although small, could have improved our fabulous platform, made it much more competitive and relaunched it.

In reality, there is a third factor, in addition to the clock frequency and the memory bus, that would have allowed us to go much further. Before talking about it, however, I think it is necessary to look at what Commodore did, or thought of doing, to understand how it moved towards the same goals. This also allows us to lift the veil on this factor and understand why it is so crucial.

1985-1986: Ranger

The first shake-up was attempted by the machine’s main designer himself, Jay Miner, who worked on a project internally called Ranger. There are no precise sources on when the work took place. Its beginnings are dated to 1985 and some accounts stretch as far as 1989, but it seems that the project was cancelled internally in mid-1986 and that some of its parts were merged into another project concerning high-definition.

Unfortunately, this was far too ambitious a task for the time. The figures circulating speak of support for resolutions up to 1024×1024 with 128 colours. These are exorbitant numbers if we consider the 320×200 with 64 colours of the original machine, which had only 32 colour registers: the 64 colours are obtained with the EHB mode.

To give you an idea, and assuming the screen is refreshed (displayed) 60 times per second (60Hz/FPS), Ranger would have required just over 14 times the memory bandwidth compared to OCS (the original chipset): a stratospheric value for those times, to say the least.
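As a rough check of the 14x figure, here is a back-of-the-envelope calculation. It rests on my own assumption, not stated above, that the comparison is made against the most data-hungry OCS display mode, 640×200 with 16 colours (4 bitplanes). Both screens are refreshed 60 times per second. With that baseline the ratio comes out at about 14.3.

```python
def display_bandwidth(width: int, height: int, bits_per_pixel: int, hz: int = 60) -> float:
    """Bytes per second the video controller must fetch just to refresh the screen."""
    return width * height * bits_per_pixel / 8 * hz

# Ranger: 1024x1024 with 128 colours -> 7 bits per pixel.
ranger = display_bandwidth(1024, 1024, 7)
# Assumed OCS baseline: 640x200 Hires with 16 colours -> 4 bitplanes.
ocs_hires = display_bandwidth(640, 200, 4)

ratio = ranger / ocs_hires
print(f"Ranger: {ranger / 1e6:.1f} MB/s, OCS: {ocs_hires / 1e6:.2f} MB/s, ratio: {ratio:.2f}")
# Ranger: 55.1 MB/s, OCS: 3.84 MB/s, ratio: 14.34
```

The refresh rate cancels out in the ratio, so the result does not depend on the 60Hz assumption.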

This is why it would have been necessary to resort to expensive VRAM, which ideally could have doubled the memory bandwidth. That alone, however, would not have been sufficient: a data bus of at least 32 bits would also have been required. Combining the two would have given a factor of 4, which is still a long way from 14.

At this point, the only other possibility would have been to increase the memory frequency by at least a factor of 3. That would have required 90-95ns memory: perhaps available at the time, but certainly not in the mainstream/consumer sector.
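The same reasoning can be taken one step further. VRAM (2x) and a 32-bit bus (2x) give a factor of 4, so the rest has to come from faster memory cycles.

The sketch below makes two assumptions:

- the starting point is the OCS chip bus slot of about 280 ns (one slot per colour clock at the NTSC 3.579545 MHz rate);
- the required speed-up is obtained by simply dividing that slot by the remaining multiplier.

This is a simplification that ignores the difference between DRAM access time and cycle time. With a factor of exactly 3 the slot lands at about 93 ns, in line with the 90-95ns quoted above. The full 14.3x would push it closer to 78 ns.

```python
NTSC_COLOUR_CLOCK_HZ = 3_579_545          # one chip-bus slot per colour clock
OCS_SLOT_NS = 1e9 / NTSC_COLOUR_CLOCK_HZ  # ~279 ns per slot

def required_slot_ns(total_factor: float, vram_factor: float = 2.0,
                     bus_factor: float = 2.0) -> tuple[float, float]:
    """Return (remaining speed-up the memory must provide, resulting slot time in ns)."""
    remaining = total_factor / (vram_factor * bus_factor)
    return remaining, OCS_SLOT_NS / remaining

for factor in (12.0, 14.34):
    remaining, slot = required_slot_ns(factor)
    print(f"total x{factor}: memory must be x{remaining:.2f} faster -> ~{slot:.0f} ns per slot")
```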

I think it is clear that this is not science fiction, but it comes very close: these were realistically unattainable goals. You can be as visionary as you want, but projects need to turn into commercially viable products, and this one certainly would not have.

It is interesting to note (and take note!), however, that increasing the colour palette from 32 to 128 entries would have required a considerable number of transistors. Again by way of comparison, 128 colour registers amount to about half of all the registers of the original chipset.

One might think that this too was not feasible. Fortunately, advances in manufacturing processes have allowed the number of transistors packed into chips to double roughly every two years (the observation known as Moore’s Law). This is compatible with the timing: the first Amiga, the 1000, dates back to 1985.

1987: Fat Agnus

With the arrival of the Amiga 500 and 2000 models, Commodore also introduced a new version of Agnus, the most important chip of the trio. In its PLCC package it became a little bigger than the previous DIP version.

Although two years had passed, unfortunately the only innovation it brought was support for 1MB of memory instead of the previous 512kB. And it came with a big “but”: frankly, the work that was done leaves a very bitter taste in the mouth.

This is because this MB is in reality split into two parts:

- 512kB of Chip memory, always present in an Amiga;
- 512kB of Slow memory (as it was rightly called), present only if the system has been expanded or already comes with it, as in the case of the Amiga 2000.

The tragedy with the latter type of memory is that it is the worst that has been devised, as it has only the disadvantages of Chip and Fast memory, and no advantages (apart from providing a little extra space).

In fact, on the processor side it works exactly like Chip memory: the CPU has the lowest priority and can only access it when no custom chip is using the bus. On the chipset side, the custom chips cannot access it at all: only the processor can.
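To summarise the three memory types compactly, the following toy model records two things for each kind of RAM: whether the custom chips can reach it, and whether the CPU has to wait for them. It is a deliberate simplification of what is described above. It has no timing details, and the class and field names are hypothetical. Its only purpose is to make the "worst of both worlds" nature of Slow memory explicit.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MemoryType:
    name: str
    chipset_dma: bool    # can the custom chips (Blitter, display, audio, disk) access it?
    cpu_contended: bool  # does the CPU compete with custom-chip DMA on the same bus?

CHIP = MemoryType("Chip", chipset_dma=True, cpu_contended=True)
SLOW = MemoryType("Slow", chipset_dma=False, cpu_contended=True)
FAST = MemoryType("Fast", chipset_dma=False, cpu_contended=False)

def pros_and_cons(mem: MemoryType) -> list[str]:
    """Describe a memory type in terms of who can use it and at what cost."""
    return [
        "usable by custom chips" if mem.chipset_dma else "invisible to custom chips",
        "CPU waits for DMA" if mem.cpu_contended else "CPU runs at full speed",
    ]

for mem in (CHIP, SLOW, FAST):
    print(f"{mem.name:5}: {', '.join(pros_and_cons(mem))}")
```

Slow is the only type that collects both drawbacks.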

This is, as can be seen, another “stroke of genius” on the part of Commodore engineers, who, in order to save on the cost of the memory refresh circuitry for this extra memory, severely castrated its use, with a huge impact especially in the gaming field.

1988: Fat Agnus with 1MB Chip memory

The problem was solved with the next version, which allowed the extra 512kB to be used as Chip memory. This was a wise choice, because that is what the Amiga needed most. By then, however, the damage was done, and it was enormous.

This was because this additional memory was not exploited as one would have wished, except by the OS and applications. The games, in fact, were still developed for the configuration that had by then become widespread: 512kB of Chip memory, to which the 512kB of Slow memory (or Fast, if programmers were clever enough not to consider Slow exclusively) was eventually added.

Software houses could not do much, considering the installed base of machines for the Amiga market, so development costs had to take into account only one supported configuration, and nothing else.

This is because developing a game to take full advantage of a system with 512kB of Chip + 512kB of Slow/Fast and another with only 1MB of Chip is quite different and leads to equally distant results.

With 1MB of Chip, everything becomes enormously easier. Programmers generally only have to think about how to keep the custom chips fed, with the processor reduced to the role of a mere servant.

With 512kB of Chip and 512kB of Slow/Fast, on the other hand, there is a complete change of approach, as all the assets must be wisely divided up to make the best use of the 512kB of Chip available.

The other 512kB of Slow/Fast will be used for code (generally), data structures, and the musical score, which take up little space. What’s left over either remains unused (and that’s a lot!) or you have to find ingenious ways to load some assets into it, to be quickly copied into small buffers in the Chip and then made accessible to the custom chips (which I had to do, and heavily, with the games I worked on: Fightin’ Spirit and USA Racing).

A hell of a job for the developers, costing time and therefore money for the software house, with results that can differ significantly. For example, with 1MB of Chip, Fightin’ Spirit could have had a floor parallax covering 1/4 of the screen, like Street Fighter II, as well as different background animations. Unfortunately, this discouraged developers from properly exploiting systems with 1MB of Chip.

1990: ECS

The launch of the Amiga 3000 also brought with it the new ECS chipset, which introduced the following changes and new features:

- up to 2MB Chip memory;

- the possibility for the Blitter to work on rectangular regions of up to 32768×32768 pixels (compared to 1024×1024 in the previous version);

- greatly improved genlock support;

- programmable video signal (instead of working only with NTSC/PAL) to create new modes;

- support for video modes with higher resolutions (VGA / 640×480 progressive/non-interlaced and Super-Hires 1280×200/256), albeit with a maximum of 4 colours (from a palette of only 64 in the case of Super-Hires);

- finer horizontal positioning of sprites in Super-Hires mode;

- sprites displayable in the screen borders;

- addition of an Ultra-Hires screen and an Ultra-Hires sprite (using VRAM memory, plus several new registers in the custom chips) for a second independent screen.

This is a lot of new stuff, as you can see, but actually of very little general use. The Amiga was used extensively in television and video production, so improving genlock support was sacrosanct, given that the competition was not standing idly by, but it still remains a small (albeit important!) niche market.

The programmability of the video signal and the support for higher resolutions are a clear attempt to catch up with the explosion of PC graphics cards that followed the introduction of VGA. They have little impact, however, on the Amiga market, which has always been tied to the TV signal (NTSC/PAL/SECAM). Besides, the new resolutions leave a very bitter taste in the mouth, since only four colours can be displayed.
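One way to see why the new ECS modes stop at four colours is to count how many bits the display has to fetch within the time of one standard TV scanline.

The sketch makes two assumptions:

- the OCS/ECS fetch budget equals that of the 640-pixel Hires mode with 4 bitplanes. This is my simplification; the real DMA slot allocation is more involved.
- the Productivity mode (640×480 non-interlaced, about 31kHz) draws two lines in that time, so its per-line fetch is counted twice.

Both Super-Hires and Productivity at 4 colours land exactly on the budget, so more colours would not fit.

```python
# Bits fetched per 15kHz scanline period (the duration of one PAL/NTSC line).
# Each mode: (pixels per line, bitplanes, lines drawn per 15kHz period).
MODES = {
    "Lores 32 colours (OCS)":       (320, 5, 1),
    "Hires 16 colours (OCS)":       (640, 4, 1),
    "Super-Hires 4 colours (ECS)":  (1280, 2, 1),
    "Productivity 4 colours (ECS)": (640, 2, 2),
}

def bits_per_line_period(width: int, bitplanes: int, lines_per_period: int) -> int:
    return width * bitplanes * lines_per_period

BUDGET = bits_per_line_period(640, 4, 1)  # assumed ceiling: Hires with 4 bitplanes

for name, (w, planes, lpp) in MODES.items():
    bits = bits_per_line_period(w, planes, lpp)
    print(f"{name:30} {bits:5} bits  {'fits' if bits <= BUDGET else 'exceeds budget'}")

# One more bitplane in Super-Hires (8 colours) would need 3840 bits: over budget.
print(bits_per_line_period(1280, 3, 1) > BUDGET)
```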

Also of little use is the possibility of positioning sprites more finely horizontally. Some games could have exploited it for smoother movement of elements such as clouds in the landscape, but nothing particularly impactful. Ditto for the possibility of displaying sprites in the borders: useful perhaps for some demos, but not for games. Games already struggled to use the borders for normal graphics, a far more important feature.

There were also very few opportunities to exploit the larger areas supported by the ECS Blitter, because games used the low resolution (320×200 in NTSC and 320×256 in PAL) with a maximum of 64 colours. It would have come in handy for the OS, for example to move windows around the screen. Even there, though, with high resolution and few colours (the Workbench used only four), it would have saved only a little work when programming this coprocessor.
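To make the size limits concrete, the sketch below packs a blit size into the size registers. The layout is the one described, as I recall it, in the Amiga Hardware Reference Manual:

- On OCS, BLTSIZE holds the height in its upper 10 bits and the width in 16-bit words in its lower 6 bits. A zero field means the maximum (1024 lines, 64 words).
- ECS adds BLTSIZV (15-bit height) and BLTSIZH (11-bit width in words).

Treat the exact bit layouts and the "zero means maximum" convention as assumptions to verify against the manual. This is a model of the encoding, not driver code.

```python
def ocs_bltsize(width_words: int, height: int) -> int:
    """Encode OCS BLTSIZE: bits 15-6 = height, bits 5-0 = width in words (0 = maximum)."""
    if not (1 <= width_words <= 64 and 1 <= height <= 1024):
        raise ValueError("OCS blit limited to 64 words x 1024 lines")
    return ((height % 1024) << 6) | (width_words % 64)

def ecs_bltsize(width_words: int, height: int) -> tuple[int, int]:
    """Encode ECS (BLTSIZV, BLTSIZH): 15-bit height, 11-bit width in words (0 = maximum)."""
    if not (1 <= width_words <= 2048 and 1 <= height <= 32768):
        raise ValueError("ECS blit limited to 2048 words x 32768 lines")
    return height % 32768, width_words % 2048

# A 320x256 low-resolution bitplane (20 words wide) fits easily on both.
print(hex(ocs_bltsize(20, 256)))   # 0x4014
print(ecs_bltsize(2048, 32768))    # (0, 0): both fields at their maximum
print(64 * 16, 2048 * 16)          # maximum widths in pixels: 1024 32768
```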

The only novelty that could have been appreciated is the support for the 2MB Chip memory, but as the ECS chipset originally only arrived with the Amiga 3000, the small number of models sold would never have justified the production of specific games (as illustrated above with the 1MB version).

The Ultra-Hires screen and sprite deserve special mention. They most likely derived from the work done on the aforementioned Ranger chipset, also given the use of VRAM. No use of them is known, despite the considerable number of registers dedicated to them and, probably, the many transistors needed to implement them. They were so useless that the later AAA chipset removed them altogether.

In summary, a good five years after the introduction of the first Amiga, Commodore presented nothing more than a reheated soup. I remind you again that access to Chip memory remained that of the 68000, castrating the performance of all the newer Motorola processors. Commodore completely ignored the enormous steps forward that the competition had made in all fields/markets.

1990: Amber

However, the 3000 also introduced a brand new chip, Amber. So much for the alleged directive of Commodore management, “read my lips, no new chips”: a mantra that some engineers used to offload their own incompetence onto the management. Amber's only job is to convert the video signal generated by Denise (the chip responsible for video output) from interlaced to “progressive”, that is, non-interlaced and suitable for the 31kHz multisync monitors then in vogue in the PC world.

This is a pale (and also quite costly) attempt to offer resolutions such as 640×400 (NTSC) or 640×512 (PAL) in 16 colours without the annoying interlace flicker, and therefore suitable for professional use.

Instead of investing all these resources in a completely new chip reserved for a niche (albeit an important one), it would have been better to divert them to modernising the original chipset, which would have benefited everyone, and with far better results.

In fact, it was a flop, later easily surpassed by the AGA chipset, albeit only after two long years. In the meantime, the competition was churning out much better products in this respect. I recall that IBM had already made 640×480 progressive in 16 colours available with its VGA-equipped PCs in ’87.

1990-…: the DSP 3210 from AT&T

To get back on track, particularly on the audio side, its engineers thought it a good idea (a “good” idea indeed!) to look elsewhere to close the now huge gap, finding the answer in AT&T’s DSP 3210.

We have already talked about this extensively in the first article of the series dedicated to audio, so there is no need to add anything else, except that it was another stroke of genius (!) from the brilliant minds that had taken over the development of the platform after the original group left.

In the end, the chip was never used in any Commodore product. It was meant for future machines, but the company went bankrupt in 1994, so nothing more was done with it and it remained just an experiment.

1992: AGA

The launch of the Amiga 1200 and 4000, on the other hand, saw the introduction of a new chipset, AGA, which brought several innovations. It took a good seven years (since the OCS), but it was finally possible to get our hands on, and exploit, something really significant:

- up to 256 colours from a palette of 16 million for all resolutions (i.e. also Super-Hires, VGA, etc.) thanks to the quadrupled memory bandwidth (provided by the 32-bit data bus for the chipset as well, plus the use of fast page mode);

- 8-bit extended HAM mode (HAM8) to display up to 262 thousand colours (from 16 million);

- Dual Playfield mode that finally comes with 16 + 15 colours, with the possibility of choosing, from the 256 available, the part of the palette used by the second screen;

- sprites up to 64 pixels wide, with the possibility of choosing the part of the 256-colour palette independently for “even” and “odd” sprites, and of setting their horizontal resolution (e.g.: Super-Hires sprites even if the screen is in low resolution);

- finer horizontal positioning of sprites and also of screens in all resolutions;

- other improvements to genlock.
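The "quadrupled bandwidth" in the first point of the list comes from two independent doublings:

- a 32-bit bus instead of 16 bits;
- fast page mode, which lets a second access follow within the same slot.

On AGA the fetch width is selected through the FMODE register. The sketch below treats the display fetch budget as a single number per scanline, which is a simplification. It assumes an OCS/ECS budget equal to Hires with 4 bitplanes, as with the ECS modes, and caps OCS at 6 bitplanes and AGA at 8. It shows why 8 bitplanes (256 colours) become possible even at Super-Hires.

```python
OCS_BITS_PER_SLOT = 16  # one 16-bit word per chip-bus slot

def fetch_bits_per_slot(bus_bits: int, fast_page: bool) -> int:
    """Bits delivered per DMA slot: bus width, doubled by a fast-page second access."""
    return bus_bits * (2 if fast_page else 1)

AGA_FACTOR = fetch_bits_per_slot(32, fast_page=True) // OCS_BITS_PER_SLOT  # 4

# Assumed per-scanline budget: OCS/ECS Hires with 4 bitplanes (640 * 4 bits).
OCS_LINE_BUDGET = 640 * 4
AGA_LINE_BUDGET = OCS_LINE_BUDGET * AGA_FACTOR

def max_bitplanes(width: int, line_budget: int, cap: int = 8) -> int:
    """Bitplanes that fit in the budget at a given width, limited by the hardware cap."""
    return min(cap, line_budget // width)

for width in (320, 640, 1280):
    ocs = max_bitplanes(width, OCS_LINE_BUDGET, cap=6)
    aga = max_bitplanes(width, AGA_LINE_BUDGET)
    print(f"{width:4} px: OCS/ECS {ocs} bitplanes, AGA {aga} bitplanes")
```

The same budget also explains why 640×480 progressive at 256 colours is AGA's ceiling. At the doubled line rate it needs 2 × 640 × 8 = 10240 bits per TV line period, exactly the AGA figure.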

For the genlock, and for the finer horizontal positioning of sprites and screens, the same considerations already made for the ECS apply. The same goes for the choice of horizontal sprite resolution: these are features of limited usefulness.

The lion’s share is obviously taken by the colour extensions, which represent a leap forward in the purely visual part of the platform (and only that, unfortunately!). The platform, however, still lags far behind the competition. For years now, the competition has been able to count on 32/64 thousand colour (High Color) and 16 million colour (True Color) modes, as well as much higher resolutions and refresh rates.

To give you an idea, the first SVGA video cards (we are talking about a good five years earlier: 1987) already supported resolutions such as 640×480 (progressive; not interlaced) with 256 colours, which is the maximum the AGA can support with the available memory bandwidth.

What remains embarrassing, to say the least, is the computational capacity, which has remained exactly the same. The Blitter hasn’t changed one iota since the ECS, which in turn hadn’t changed much since the OCS, as we have seen. Neither, therefore, has what it can do in terms of performance.

The only advantage comes to it indirectly. Thanks to the quadrupled bandwidth for fetching the screen, the display takes fewer slots at the same resolution and number of colours displayed (and manipulated). This leaves more free slots for the Blitter's access to Chip memory. But we are a long way from the nonsense that was read back in the day, such as a double-speed or even quadruple-speed Blitter!

I made some calculations some time ago and shared them on the Amigaworld forum, the largest general-interest portal for the Amiga and its derivatives. According to them, in low resolution (320×200/NTSC, 320×256/PAL) one could only go from 32 to 128 colours (5 to 7 bitplanes) while rendering the same graphic objects, in the same number and size. Ditto when switching from the OCS/ECS Dual Playfield (8 + 7 colours) to the AGA one (16 + 15 colours): you could move roughly the same number of objects of the same size.

Put another way, take any 32-colour or Dual Playfield Amiga game. You could have made exactly the same game in 128 or 16 + 15 colours respectively, but without being able to add more graphic objects to the screen or to display larger ones.

If you think about it, this is certainly not much of a step forward compared to the quadrupling of the memory access bandwidth. That bandwidth, however, was reserved for the video controller alone, and that is the plague of the platform.

Incidentally, reserving the higher bandwidth for the screen alone carries a hefty price for games with horizontal scrolling. If you use the maximum bandwidth (4x, the most coveted and useful setting), you lose seven of the eight 4-colour sprites, leaving only one usable. A disgrace (!), forcing programmers to do without these precious resources and fall back on the Blitter, which, as we have seen, cannot do much.

Last but not least, Commodore’s “wonderful” engineers gave us the most useless thing game programmers could wish for: 64-pixel-wide sprites, which on a 320-pixel-wide screen take up a good 1/5 of it. You might think they would be fine for the so-called “final boss”, but only at the cost of a huge waste of memory. You can’t reuse their graphics as you can with tiles, and that’s why you need small sprites, combined into larger images so that graphics are recycled as much as possible.

However, the saddest thing is that sprites still remain 4-colour, except when combined in pairs to reach 16 colours. Yet by exploiting the data read with double (2x) or quadruple (4x) bandwidth, one could have had sprites with 16 or 256 colours respectively. Alternatively, with 4x bandwidth, one could have had 16-colour sprites 32 pixels wide instead of the canonical 16. Either would have been far more useful.
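The arithmetic behind this point is simple. A standard sprite line is 16 pixels × 2 bits = 32 bits. If a sprite could spend the extra data fetched in 2x or 4x mode on depth rather than on width, the same bits per line could be split differently. The sketch below enumerates those splits. It describes a hypothetical design, not something the AGA actually offers.

```python
BASE_WIDTH, BASE_DEPTH = 16, 2  # OCS/ECS sprite: 16 pixels, 2 bits per pixel (4 colours)

def sprite_layouts(fetch_factor: int) -> list[tuple[int, int, int]]:
    """(width, bits per pixel, colours) combinations that use the same bits per line."""
    bits_per_line = BASE_WIDTH * BASE_DEPTH * fetch_factor
    layouts = []
    for depth in (2, 4, 8):
        width = bits_per_line // depth
        if width >= BASE_WIDTH:  # never narrower than a classic sprite
            layouts.append((width, depth, 2 ** depth))
    return layouts

for factor in (1, 2, 4):
    print(f"{factor}x:", sprite_layouts(factor))
```

With a 4x fetch the AGA picked the first option, 64 pixels at 4 colours. The same 128 bits could have given 32 pixels at 16 colours, or 16 pixels at 256 colours.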

The rest of the components, as already mentioned, have not changed at all. This remains another big, indeed very big, missed opportunity to make our beloved platform competitive again.

1992-…: AA+

The next chipset, called AA+, was meant to make up, at least in part, for the AGA’s many shortcomings. It was due in 1994 for the new low-cost machines (the top of the range would get the AAA) and would be 100% compatible with its predecessor.

Not much is known about its features, since all the attention and expectations of media and users were focused on the AAA. From a few interviews and presentations, however, the following leaked out:

- up to 800×600 at 256 colours due to double the bandwidth of the AGA (thus 8 times the bandwidth of OCS/ECS);

- 16-bit packed/chunky mode (32,000 colours), but only for resolutions up to 640×480 (again due to limited bandwidth);

- Blitter at twice the speed, through a higher clock (no 32-bit version was mentioned);

- Chip memory up to 8MB (compared to 2MB);

- floppy disks up to 4MB at full speed;

- serial ports equipped with FIFO buffers.

The serial ports would finally get FIFO buffers, ending the torment of characters/bytes lost during transmission. The 4MB floppy disks, on the other hand, would have arrived at a time when hard disks had become the norm and CDs were taking over as the mass storage medium. Software by then required several floppy disks for distribution, due to its much “fatter” content.

Otherwise, these are barely decent specifications for low-cost machines, considering that two years later the competition had already churned out much better. Still, it is not an attempt to be dismissed altogether.

In fact, the doubled-frequency Blitter seems to be going in the right direction. It looks like a timid attempt, though, if we consider that the chipset now works at twice the frequency of the AGA, which in turn works at twice the frequency of the OCS/ECS. On the other hand, the Blitter is also the key element in terms of performance. If you increase resolutions and/or colours but lack the computing power to manipulate them, it is difficult to use them profitably, as we will see in more detail at the end of the AAA section.

This is especially true since the new Blitter does not even seem to support packed/chunky mode: there is no mention of it anywhere. The processor would therefore have to shoulder the whole burden, clearly penalising the overall performance of the system.

This would matter little if games had stayed at 256 colours, but even then the hitch of planar graphics remains. In fact, only planar graphics can be used: there is no reference to 8-bit / 256-colour packed/chunky graphics. Yet that is exactly the format that phenomena such as Wolfenstein 3D first, and above all Doom later, used to thunderously open the doors of 3D in the gaming / mass-market sphere.

Finally, despite 8 times the bandwidth of OCS/ECS, nothing is reported about the sprites. With the AGA's move to “64-bit” fetches (4x bandwidth), their width had been increased accordingly to 64 pixels. For consistency, one would have expected an increase to 128 pixels, but maybe that’s for the best. As mentioned before, 64 pixels was already too much, and 128 pixels would have been ridiculous (and still 4-colour).

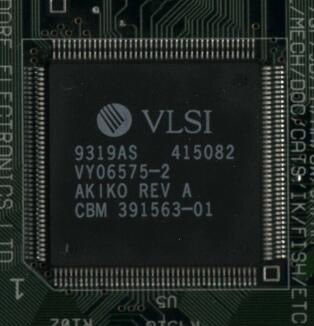

1993: Akiko

The task of trying to save the ship, now sailing in troubled waters, fell to the house's first 32-bit console (and the first ever): the CD32.

Precisely to try to recover some ground in the 3D sphere, one of its chips, Akiko, was equipped with circuitry capable of converting packed/chunky (8-bit / 256-colour) graphics into planar graphics. Planar is the only format the chipset can use.

The idea would not have been bad in itself, considering that there was no intention to modify the chips to directly support packed/chunky mode (as should have been the case long ago, and by that I mean long before the advent of 3D), but its implementation was blatantly wrong, in full “tradition” of the company’s engineers’ lack of foresight and ineptitude.

In fact, they decided to crucify the CPU. It had to write eight 32-bit longwords of packed/chunky graphics into Akiko and read back the converted result: 32 bits for each of the 8 bitplanes. The CPU then had to write those into Chip memory, the only memory available in this console.
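For readers who have never had to deal with it, this is what the chunky-to-planar conversion computes. The function below is a plain software model of the transformation for one block of 32 pixels: eight 32-bit chunky longwords in, eight 32-bit bitplane longwords out. It says nothing about how Akiko implements it internally. Two conventions are assumptions matching the usual Amiga layout: big-endian byte order, and the leftmost pixel in the most significant bit.

```python
def chunky_to_planar(chunky: list[int]) -> list[int]:
    """Convert 8 big-endian 32-bit chunky longwords (32 pixels, 1 byte each)
    into 8 32-bit bitplane longwords (plane 0 = least significant pixel bit)."""
    if len(chunky) != 8:
        raise ValueError("expected 8 longwords (32 pixels)")
    # Unpack the 32 pixels, leftmost first (most significant byte first).
    pixels = [(word >> shift) & 0xFF for word in chunky for shift in (24, 16, 8, 0)]
    planes = []
    for plane in range(8):
        value = 0
        for px in pixels:
            # Shifting left as we go puts the leftmost pixel in bit 31.
            value = (value << 1) | ((px >> plane) & 1)
        planes.append(value)
    return planes

# 32 pixels all set to colour 5 (binary 00000101): planes 0 and 2 are full.
out = chunky_to_planar([0x05050505] * 8)
print([hex(p) for p in out])
```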

This solution effectively castrated the performance of the conversion, halving it. It also completely tied up the CPU, which acted as a carrier for the data travelling back and forth to Chip memory.

The best solution would have been to give Akiko two addresses: that of the packed/chunky graphics, and that of the planar screen, with an appropriate modulo to address the 8 bitplanes correctly. Akiko could then have used a DMA channel to read and write the data. This would have sped everything up, since it would have taken half the time, and it would have completely relieved the CPU of this thankless and humiliating task.
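A rough way to see the "half the time" claim is to count bus transfers per 32-pixel block. The model assumes, as described above for the CD32, that both the chunky source and the planar destination live in Chip memory. It counts every 32-bit access the same and ignores the CPU's own instruction fetches and any speed difference between Akiko's registers and Chip memory. The DMA variant is the hypothetical design suggested above, so the result is only indicative.

```python
LONGWORDS_PER_BLOCK = 8  # 32 pixels in, 8 bitplane longwords out

def cpu_driven_transfers() -> dict[str, int]:
    """CD32 approach: the CPU carries every longword in and out of Akiko."""
    return {
        "chip_reads": LONGWORDS_PER_BLOCK,    # CPU fetches the chunky pixels
        "akiko_writes": LONGWORDS_PER_BLOCK,  # CPU writes them into Akiko
        "akiko_reads": LONGWORDS_PER_BLOCK,   # CPU reads the planar results back
        "chip_writes": LONGWORDS_PER_BLOCK,   # CPU stores them in the bitplanes
    }

def dma_driven_transfers() -> dict[str, int]:
    """Hypothetical design: Akiko reads and writes Chip memory by itself via DMA."""
    return {
        "chip_reads": LONGWORDS_PER_BLOCK,
        "chip_writes": LONGWORDS_PER_BLOCK,
    }

cpu_total = sum(cpu_driven_transfers().values())
dma_total = sum(dma_driven_transfers().values())
print(cpu_total, dma_total, cpu_total / dma_total)  # 32 16 2.0
```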

Commodore engineers, on the other hand, “ignored” (?) the fact that Akiko already had DMA capability (for reading data from the CD), and even boasted that they had thought of their solution during a lunch break and implemented it in one day.

There would have been nothing more to be added, because the situation is self-explanatory, but a statement by Dave Haynie (a well-known technician who certainly needs no introduction) on the subject of 3D, which was made in an interview, leaves one stunned and dismayed, to say the least:

What was the earilest point you realised that games were going to go heavily into 3D and that the Amiga’s chipset was not going to be right for it (basicly when did you want to start work on a chipset geared up for 3D work?)?

Well, of course, I don’t do “big chip” design. I think the point at which we knew 3D would be important was probably a year or two before the CD32 shipped.

So the engineers were already aware of the importance of 3D for the chipset a year or even two before the CD32 went on sale, but… they did absolutely nothing! And at the last moment they were reduced to the horrible patch they then put into Akiko. I would say that any comment is superfluous at this point…

1988-…: AAA

The revolution that should have solved all these problems and brought the company back into the limelight was supposed to arrive shortly afterwards, after a very long wait. The project had begun back in 1988 (other sources say 1989). It must be said, though, that it had been put on hold a few times, either to divert engineers to other, more urgent projects or for lack of funds to continue development.

Striking, however, is a statement made by Commodore’s technical manager, Lew Eggebrecht, in an interview in January 1993:

Tell me about AAA – it’s been worked on since 1989?

Yes, we worked on it from an architectural point of view for a long time but it’s only been serious for about a year. It was obvious that AAA was not going to meet our cost targets for the mid to low end systems. We wanted to continue that development and we also had to have an enhancement quickly so, AA was the solution to that problem. It would have been nice to have AAA at the same time as AA but we just couldn’t get there.

So they only started working on it in earnest a year before the interview, that is, in 1992. Before that, they had evidently been engaged in lofty, abstract discussions. This is also well known, because it emerged that for years the engineers had not known which direction to take with the evolution of the hardware: confusion reigned supreme.

This is also evident from the same interview, in particular from the part quoted here. There it is candidly admitted that “obviously” (!) AAA was too big/complex a project for the systems that were the company’s main targets, the cheaper ones. So obvious that it took a few years to realise it…

You can easily see this from the huge list of improvements and new features it was supposed to introduce, taken from the technical document written by Haynie. I have left out those already mentioned for the AA+, which are included here and to which the same considerations apply:

- 32- and 64-bit data bus using VRAM and clocked at up to 114MHz, allowing resolutions up to 1280×1024 at 32768 colours depending on the configuration (ranging from a single system with DRAM memory up to a double system with VRAM);

- 2, 4, 8, and 16-bit packed/chunky pixels (4, 16, 256 and 32768 colours, respectively), including HAM8;

- 24-bit “hybrid” packed/planar mode (3 “byteplanes“: one per colour component, using one byte to store its intensity) to finally display 16 million colours without limits;

- “compressed” graphics modes;

- 10-bit extended HAM mode (for images with 16 million colours);

- extension of bitplanes from 8 to 16 to support Dual Playfield screens of up to 256 colours each (8 + 8 bitplanes), and the HAM10 (which requires 10 bitplanes);

- frame grabber to capture images & videos in packed/chunky mode;

- integrated genlock and an additional “overlay” bitplane (probably just for genlock);

- sprites up to 128 pixels wide;

- 32-bit Blitter with several times the performance of OCS/ECS (it seems up to 8 times, although there is no clear information on this), with support for planar and packed/chunky graphics, and several operations applicable in the latter case (sorting, summing, averaging, etc.);

- Copper capable of setting the new 32-bit registers, with the possibility of operating on several registers with a single instruction. It can also work at the Blitter's request: when the latter has finished one operation, the Copper can take over and set up another one;

- 8 channels of 16-bit audio up to 64kHz, with independent left and right volume up to 12 bits, and possibility to sample 8-bit audio;

- up to 16MB Chip memory;

- CD player port.

It is not difficult to see why it was another failure and never saw the light of day after all these years of more or less brisk work: a mammoth project, which would have required chips of around one million transistors (and thus very expensive) and yet not even that competitive.

Moreover, the engineers themselves were aware that, with its monstrous complexity and high cost, the AAA would have been nothing special had it been marketed in 1994. Again from the same Haynie interview:

During the AAA development, it was pretty clear AAA would be nothing special if it ever did ship, at least not in raw specs. In 1988, a 64-bit chipset that could do 1280×1024@60Hz, with 11 bits/pixel, would have been something. In 1994, the earliest ship date had C= not failed, it would have been an expensive also-ran (four chips for a 32-bit system, six for a 64-bit system, and you needed VRAM for performance).

“Only Amiga Engineers Make it Possible!”

What was boiling in the pot with AAA

Returning to the technical features, the arrival of the coveted packed/chunky graphics, in all depths up to 16 bits, is noteworthy. It fills a huge gap, albeit very late, but the engineers decided to overdo it by also supporting the 2-bit (4 colours) and 4-bit (16 colours) formats. These would have been needed at the end of the 1980s and the beginning of the 1990s (and exclusively for 16 colours!). Afterwards they were completely irrelevant, because graphics had already moved to at least 256 colours, and even more. You can see they had time to waste and transistors to throw away…

… which could have been used to support the 24- or 32-bit packed True Colour format, as is common in any other system. Instead they added the hybrid 24-bit format, but with the three colour components (red, green and blue) distributed over what are called byteplanes, where each byte represents the intensity of the specific colour component. A format born to castrate the system’s performance considerably, as manipulating pixels in this way can result in up to three times the bandwidth required (depending on the operation), as well as more CPU calculations (more on this later).
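The bandwidth penalty of byteplanes is easy to illustrate. The sketch below models a framebuffer in two ways:

- as packed 32-bit 0RGB pixels;
- as three separate byteplanes.

Both layouts are hypothetical illustrations, not the actual AAA memory map. For each, the code counts how many aligned 32-bit words a single pixel write touches. It assumes a 32-bit bus with one access per word. The packed pixel sits in one word, while the byteplane pixel is scattered over three distant regions. That is where "up to three times the bandwidth" comes from for isolated pixel writes, such as plotting or texture mapping.

```python
WORD_BYTES = 4  # assume a 32-bit memory bus: one access per aligned longword

def words_touched(addresses: list[int]) -> int:
    """Number of distinct aligned 32-bit words hit by a set of byte writes."""
    return len({addr // WORD_BYTES for addr in addresses})

def packed32_addresses(x: int, y: int, width: int) -> list[int]:
    """0RGB layout: 4 bytes per pixel, with R, G, B at offsets 1, 2, 3."""
    base = (y * width + x) * 4
    return [base + 1, base + 2, base + 3]

def byteplane_addresses(x: int, y: int, width: int, height: int) -> list[int]:
    """Three consecutive planes (R, G, B), one byte per pixel in each."""
    plane_size = width * height
    offset = y * width + x
    return [offset, plane_size + offset, 2 * plane_size + offset]

W, H = 640, 480
print(words_touched(packed32_addresses(10, 20, W)))      # 1
print(words_touched(byteplane_addresses(10, 20, W, H)))  # 3
```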

Of little use are the new compressed modes, as they can only be used to display static images (or precomputed animations), as was already the case with the famous HAM (OCS/ECS) and HAM8 (AGA) modes, which here have been extended to 10 bits in order to give the possibility of displaying 16 million colours (as opposed to the 262 thousand from a palette of 16 million in the previous HAM8).
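A small decoder helps to show why the HAM modes suit static images but not fast-changing graphics. Each pixel either loads a base colour from the palette or modifies just one component of the previous pixel. A pixel's final colour therefore depends on everything to its left on the same line.

The sketch below is a simplified software model with these assumptions:

- pixels are given as (control, data) pairs;
- each colour component is kept at the data width: 4 bits for HAM6, 6 for HAM8, 8 for HAM10;
- each line starts from the background colour;
- the real bitplane ordering, and HAM8's handling of the low component bits, are deliberately left out.

The control codes follow the classic HAM convention: 00 palette, 01 blue, 10 red, 11 green. That AAA's HAM10 would have used the same scheme is an assumption.

```python
from typing import Sequence

Colour = tuple[int, int, int]  # (red, green, blue), each 0 .. 2**data_bits - 1

def decode_ham_line(pixels: Sequence[tuple[int, int]], palette: Sequence[Colour],
                    background: Colour = (0, 0, 0)) -> list[Colour]:
    """Decode one scanline of HAM pixels given as (control, data) pairs."""
    r, g, b = background  # assumed starting colour for each line
    out = []
    for control, data in pixels:
        if control == 0b00:
            r, g, b = palette[data]  # load a base colour from the palette
        elif control == 0b01:
            b = data                 # hold red and green, modify blue
        elif control == 0b10:
            r = data                 # modify red
        else:
            g = data                 # modify green
        out.append((r, g, b))
    return out

def ham_colours(data_bits: int) -> int:
    """Distinct colours reachable when each component has data_bits of precision."""
    return (2 ** data_bits) ** 3

print(ham_colours(4), ham_colours(6), ham_colours(8))  # 4096 262144 16777216
```

With 4, 6 and 8 data bits this reproduces the 4096 (HAM6), 262 thousand (HAM8) and 16 million (HAM10) colours mentioned in the text.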

Even the framegrabber for capturing images, the integrated genlock, and the overlay bitplane represent extremely niche features and, therefore, useless to most, although it must be said that the Amiga has always been synonymous with video, so it would have made sense to improve the platform from this point of view (albeit very belatedly).

Undoubtedly, the list of foolish choices must also include doubling the number of bitplanes, bringing them to an impressive 16 from the AGA’s 8. If there is one thing that all Commodore engineers (no one excluded!) have never understood, it is this: compared to packed/chunky graphics, planar graphics has far more disadvantages than (very modest) advantages. It adds considerable complexity not only to the system but also for the programmers who have to manage it all, with very bad repercussions on performance and on the space occupied. The choice was probably dictated by the introduction of the Dual Playfield mode with 256 + 256 colours, and by the HAM10. It would have been much better to use two byteplanes (and two bitplanes + one byteplane for the HAM10), solving the problem elegantly and efficiently.

On 128-pixel-wide sprites, there is very little to add to what I said earlier on the same subject regarding the AGA chipset. As a (former) game developer, I just find it really depressing to see resources thrown at practically useless functionality, going in a completely wrong direction.

On the other hand, it was very pleasing to find in the AAA technical document some long-desired improvements to the Copper, which I have also mentioned in previous articles. I refer specifically to the possibility of setting several registers with a single instruction of this coprocessor.

Not particularly interesting, on the other hand, is its ability to be woken up when the Blitter has completed its work. The Copper was born to “chase” the electron beam, so staying synchronised with the video is its main purpose. Letting it be diverted at any time to do something else seems dangerous to me, to say the least. In the absence of other details, however, my analysis ends here.

Epochal changes would finally arrive on the completely different front of audio too, a component that had not changed at all since the Amiga was born. In addition to the long-dreamed-of 8 channels came 16-bit samples and higher frequencies (64kHz, surpassing the "CD quality" that had been the minimum goal for a few years). On top of this came finer volume control, going from 64 to 4096 levels, and the equally awaited ability to place a channel freely on the left and/or right. These innovations seem absurd, given that they completely contradict the various statements about wanting to switch to AT&T's DSP to improve the audio section (rampant schizophrenia?). As a long-standing developer, however, I certainly approve of them, because they preserve the consistency and identity of the platform, even though 8 channels in '94 are objectively too few by now.
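Some quick arithmetic puts these audio figures in perspective. The sketch assumes that all eight channels play continuously at the maximum 64kHz rate. It also assumes that, as on the earlier chipsets, samples are fetched by DMA from Chip memory, so this data competes for bandwidth with graphics. The CD figure uses the standard 16-bit, 44.1kHz stereo format. The function names are illustrative.

```python
def audio_bytes_per_second(channels, bits_per_sample, sample_rate_hz):
    """Raw sample data fetched per second if every channel plays continuously."""
    return channels * (bits_per_sample // 8) * sample_rate_hz


def volume_bits(levels):
    """Bits needed to encode a power-of-two number of volume levels."""
    return levels.bit_length() - 1


aaa = audio_bytes_per_second(8, 16, 64_000)  # AAA worst case
cd = audio_bytes_per_second(2, 16, 44_100)   # stereo CD audio
print(aaa, cd)                               # 1024000 176400
print(volume_bits(64), volume_bits(4096))    # 6 12
```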

With graphics and sound now this "heavy", it was inevitable that thought would also be given to expanding Chip memory (remember that it is the only memory the custom chips can access) to a good 16MB, to hold increasingly bulky assets. Likewise, CD-ROM support was not added by accident, but to meet the greatly changed needs of data storage (especially for multimedia). So both additions are certainly sacrosanct and welcome.

Focus on Blitter and AAA performance

Finally, the Blitter. I've left it for last because, in my humble opinion, it is the central and most important element of the whole Amiga architecture: the one that has always taken care of the most onerous work and that, therefore, we tried to exploit as much as possible. As already mentioned above, there are no clear indications about its performance, but some interviews speak of 8 times the speed of the original one (in OCS/ECS). This obviously comes from widening the data from 16 to 32 bits (which, however, cannot always be exploited, as already shown in the previous article) combined with a quadrupled working frequency: 2 × 4 = 8. It also supports packed/chunky modes. In the case of the 16-bit mode (and only that one, at least from what emerges from Haynie's technical paper, which refers exclusively to the "Chunky" mode here), it would allow various operations to be applied to the data read from the channels.
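Why the 32-bit width "cannot always be exploited" can be seen by counting the bus words that one row of a single bitplane touches. The minimal sketch below assumes that every bus word costs the same time and ignores setup and overhead cycles. It uses the quadrupled clock quoted in the interviews, and the function names are hypothetical. Only wide objects get the full 2x from the wider bus. Narrow or awkwardly aligned ones fall back towards the 4x of the clock alone.

```python
import math


def words_per_row(x, width_px, bus_bits):
    """Bus words one bitplane row touches when the object starts at pixel x."""
    offset = x % bus_bits
    return math.ceil((offset + width_px) / bus_bits)


def blitter_speedup(x, width_px, freq_factor=4):
    """Estimated AAA gain over the 16-bit OCS Blitter for one object row.

    Simplification: every bus word costs the same time and setup/overhead
    cycles are ignored; freq_factor=4 is the quadrupled clock from interviews.
    """
    width_gain = words_per_row(x, width_px, 16) / words_per_row(x, width_px, 32)
    return freq_factor * width_gain


for x, w in [(0, 64), (5, 32), (0, 16), (20, 16)]:
    print(x, w, blitter_speedup(x, w))  # 8.0, 6.0, 4.0, 4.0
```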

There is no doubt that this is a lot of stuff, but only when compared with the previous version, which dates back a good nine years (1985). In the meantime, technology had moved first to 256 colours, then to 32/64 thousand (High Colour) and finally to 16 million (True Colour, in 24- and 32-bit flavours, the latter adding an alpha channel to specify colour transparency/opacity). The video controller had no direct support for True Colour and offered it only through the aforementioned 24-bit hybrid mode (3 byteplanes = 3 bytes per pixel), so the Blitter did not support it either. As a result, the new operations cannot be applied to the channels, which in the hybrid mode are treated as 8-bit (1-byte) packed/chunky graphics. On top of that, splitting 24-bit graphics into three parts reduces overall performance.
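The cost of splitting 24-bit graphics into three byteplanes can be sketched as follows. This is a simplified model, not the AAA's actual interface. `blit_copy8` is a hypothetical stand-in for a single 8-bit chunky blit, and the pixels are assumed to be packed as 0xRRGGBB before splitting. A single logical 24-bit copy turns into three separate blits, one per colour component.

```python
def split_rgb24(pixels):
    """Split packed 0xRRGGBB pixels into three 8-bit byteplanes (R, G, B)."""
    red = bytearray((p >> 16) & 0xFF for p in pixels)
    green = bytearray((p >> 8) & 0xFF for p in pixels)
    blue = bytearray(p & 0xFF for p in pixels)
    return [red, green, blue]


def join_rgb24(planes):
    """Recombine three byteplanes into packed 0xRRGGBB pixels."""
    red, green, blue = planes
    return [(r << 16) | (g << 8) | b for r, g, b in zip(red, green, blue)]


def blit_copy8(src, dst, start, length):
    """Hypothetical stand-in for one 8-bit chunky blit over a single byteplane."""
    dst[start:start + length] = src[start:start + length]


def blit_copy24_hybrid(src_planes, dst_planes, start, length):
    """In the hybrid mode one 24-bit copy becomes three separate 8-bit blits."""
    blits = 0
    for src, dst in zip(src_planes, dst_planes):
        blit_copy8(src, dst, start, length)
        blits += 1
    return blits


src = split_rgb24([0x112233, 0x445566, 0x778899, 0xAABBCC])
dst = split_rgb24([0] * 4)
print(blit_copy24_hybrid(src, dst, 1, 2))  # 3
print([hex(p) for p in join_rgb24(dst)])   # ['0x0', '0x445566', '0x778899', '0x0']
```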

Performance is precisely the crucial aspect that, unfortunately, did not keep pace with the improvements in displayed colours and resolutions. To understand how poor the new Blitter's performance is, it has to be compared with the purely visual increase from the OCS of '85 to the AAA of '94. Suppose that in '85 games ran at 320×200 and 60 frames per second, with 5 bitplanes = 32 colours. I'm using this scenario purely for illustration, without going too deeply into the complexity of the various Amiga games. The basic version of the AAA (a single chipset with DRAM memory) can reach 800×560 at 10 bitplanes, which is exactly 14 times as much data per frame. Even with packed graphics, which saves around 25 per cent of the bandwidth compared to planar graphics in more complex processing, the factor would still be about 10.5, higher than the new Blitter's 8x. The maximum configuration, with dual chipsets and VRAM memory, reaches 1280×1024 at 16 bits per pixel. There the factor becomes about 65.5, and the comparison becomes embarrassing.
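The factors above follow from a few multiplications. The sketch below reproduces them under the same simplifying assumption as the text: the workload is proportional to the number of bits per frame, and the frame rate is the same on both sides, so it cancels out. The names are illustrative.

```python
def bits_per_frame(width, height, bits_per_pixel):
    """Bits of graphics data in one frame."""
    return width * height * bits_per_pixel


FPS = 60  # same refresh rate on both sides, so it cancels out in the ratios

ocs = bits_per_frame(320, 200, 5) * FPS         # OCS, 5 bitplanes (32 colours)
aaa_base = bits_per_frame(800, 560, 10) * FPS   # AAA, single chipset + DRAM
aaa_max = bits_per_frame(1280, 1024, 16) * FPS  # AAA, dual chipset + VRAM

BLITTER_SPEEDUP = 2 * 4  # 16 -> 32 bits, times a quadrupled clock
PACKED_SAVING = 0.25     # ~25% saved by packed pixels (figure from the text)

print(aaa_base / ocs)                        # 14.0
print(aaa_base / ocs * (1 - PACKED_SAVING))  # 10.5
print(aaa_max / ocs)                         # 65.536
print(BLITTER_SPEEDUP)                       # 8
```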

The numbers alone may not make the problem clear, but a change of perspective shows more easily why a Blitter that is at most eight times faster is inadequate. The key is what could be done in '85 with the OCS. Suppose you could move 10 graphic objects of a certain size on that screen with those 32 colours. To do exactly the same thing, scaled proportionally, on an 800×560 screen with twice as many bitplanes (10, i.e. 1024 colours), you would need a Blitter 14 times faster. Otherwise you will necessarily have to cut something: move fewer objects and/or make them smaller and/or use fewer colours. There is no escaping this, because there is not enough computing power.
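Those trade-offs can be quantified with a minimal sketch. It assumes, as the text does, that Blitter work grows linearly with pixels × bitplanes and that the new Blitter is 8 times faster. The function names are hypothetical. With only 8x, the 10 objects of the OCS example shrink to 5 on the basic AAA screen and to 1 on the maximum configuration. Keeping all 10 objects at 800×560 would mean going back to 5 bitplanes, the same 32 colours as in '85.

```python
def objects_that_fit(ocs_objects, workload_factor, blitter_speedup=8):
    """Proportionally scaled objects that fit in the same frame time,
    assuming Blitter work grows linearly with pixels x bitplanes."""
    return int(ocs_objects * blitter_speedup / workload_factor)


def max_bitplanes(width, height, blitter_speedup=8, ocs_bits=320 * 200 * 5):
    """Most bitplanes that keep the per-frame workload within the speedup."""
    return int(blitter_speedup * ocs_bits / (width * height))


print(objects_that_fit(10, 14))      # 5
print(objects_that_fit(10, 65.536))  # 1
print(max_bitplanes(800, 560))       # 5
```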

That is why it would have been essential to raise the Blitter's performance much further, with higher operating frequencies and, in the case of the dual chipset, wider data as well. The problem is that, in the latter case, Commodore's engineers once again chose to make life easier for themselves. They reserved the 64-bit bus exclusively for the video controller chips (similarly to what was done with the AGA) and left the other chips (the equivalents of Agnus = Blitter & Copper, and Paula = audio & disk) with only 32-bit access.

If there is one thing they have always been very good at, it is bringing out decidedly crippled platforms…

1993-…: Hombre

Even if it had ever seen the light of day, the AAA would have been the last Amiga chipset anyway. Commodore had already decided to turn everything upside down and replace the system entirely, scrapping both the custom chips that had started the beautiful adventure with the Amiga 1000 and the Motorola processor.

In fact, a completely new platform, internally called Hombre, was in development. It was based on a custom version of HP's PA-RISC CPUs, flanked by one or more custom chips to handle graphics (with a strong focus on 3D) and sound.

I will stop here and not examine it any further, because it would not have been an evolution of the machines we know well, but something totally new. The title of the technical documents says it all: "Beyond Amiga".

Conclusions

Since this series is about the Amiga and nothing else, the analysis of the innovations that Commodore brought or planned for our beloved platform ends here. The next article will set out my view on when and how improvements could realistically have been introduced, taking into account the requirements and technological limitations of the time.