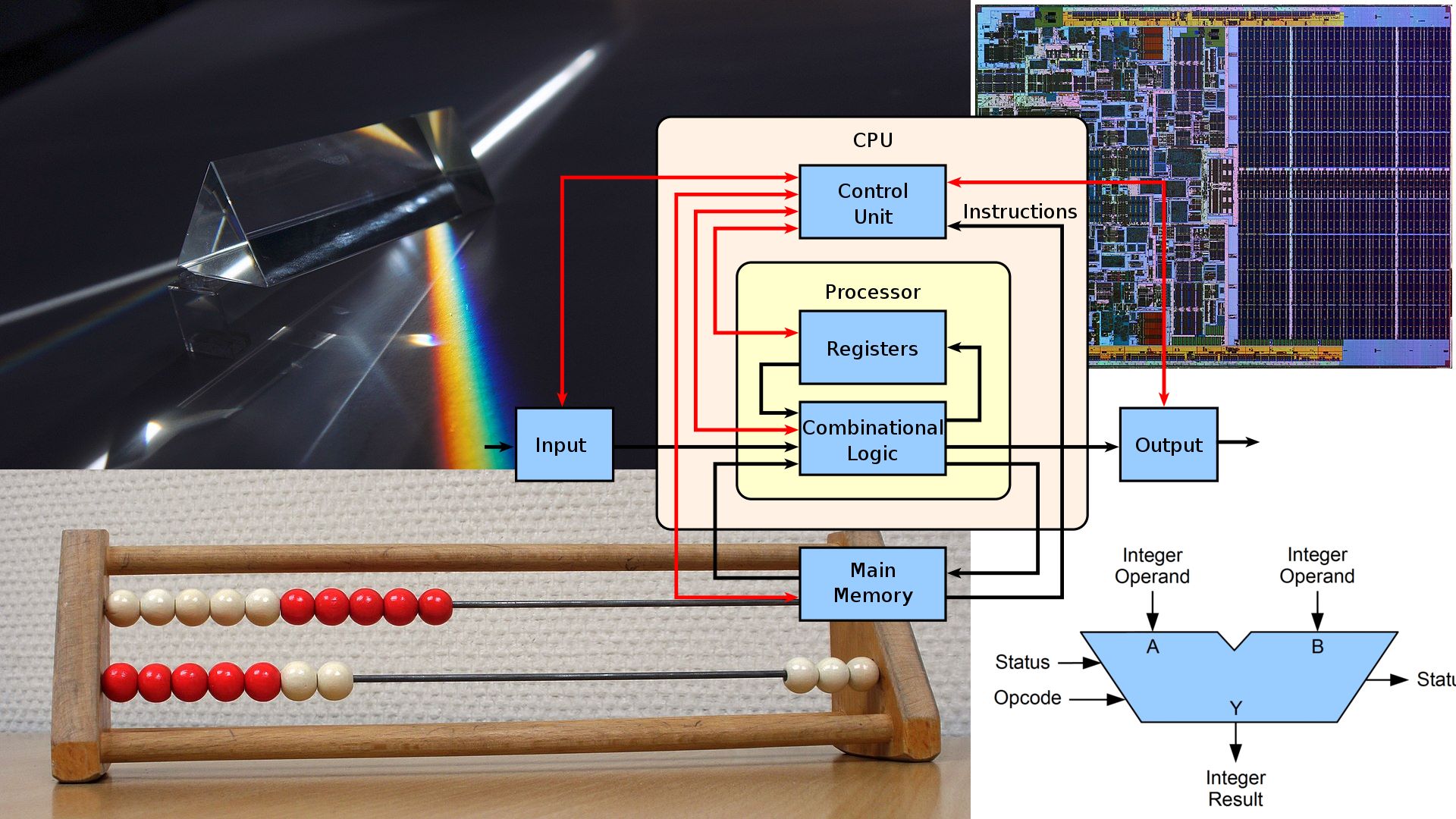

A scientific publication has recently appeared about what is being hailed as a revolution in computing: a CPU built entirely from optical components. On paper it should not only be far more efficient, but also able to handle the typical tasks of current (electronics-based) CPUs, and for this reason it is described as “general-purpose”.

Since I have no expertise in optics or electronics, I will limit myself to analysing a few points, mostly architectural, giving my opinion and/or expressing my doubts about what the paper reports. The paper purports to debunk alleged clichés, but, as we shall see (quoting the salient parts), its effect is diametrically opposed to expectations.

Clichés about CISC CPUs… in reality they are not

Modern CISC (complex instruction set computer) architectures, such as x86, contain a multitude of instructions that are barely used, if at all, by most pieces of software, and were included to speed up very specific edge cases. The renewed interest in RISC (reduced instruction set computer) architectures is a clear indication, that this excess is not necessarily a good development. […] For general-purpose tasks on an x86, the most common instructions are MOV, ADD, PUSH and those discussed in Table II. But the availability of billions of transistors in electronic ICs has allowed the x86 instruction set to bloat to over 1000’s of instructions optimizing even the rarest of use.

Unfortunately for the authors, the reality is completely different. The so-called RISC processors had a very short life, because technological advances demolished the very principles on which they were founded, as has been amply demonstrated here in a recent series of articles (The final RISCs vs. CISCs). At most one can speak of L/S (Load/Store) architectures, but certainly not of RISCs!

Indeed, what are still being passed off as RISCs are anything but architectures with a reduced instruction set, essentially for one reason: performance. This can be trivially verified by going through the instruction lists of such architectures.

One example is RISC-V (mentioned once in the article), which has long since exceeded 500 instructions once all the already ratified extensions are taken into account (and more will be added as further extensions are approved). I challenge anyone to keep equating RISC with “reduced instruction set” when processors passed off as such have been integrating hundreds of instructions for several years now…

It is also true that x86 has many instructions and that several of them are rarely used, but they are there because they become very useful, and make a real difference, when they are executed. The example of RPCS3 (the best-known PS3 emulator for PCs), taken from a developer’s blog, shows this clearly (and it is certainly not the only one):

The performance when targeting SSE2 is absolutely terrible, likely due to the lack of the pshufb instruction from SSSE3. pshufb is invaluable for emulating the shufb instruction, and it’s also essential for byteswapping vectors, something that’s necessary since the PS3 is a big endian system, while x86 is little endian.

The numbers are merciless, to say the least: going from the SSE2 build to the SSE4.1 build (whose gain is due almost exclusively to the single PSHUFB instruction), the number of frames generated (in that scene, though the result holds overall) rises from about 5 to a good 166, a factor of about 33! Clear proof that the authors’ thesis is unfounded (I would rather speak of a detachment from reality).

The reason is simple: over time, all architectures have added new instructions to their ISAs precisely because there was (and still is) a need to improve performance for specific execution patterns, even though such additions are rarely used overall.

The optical solution: one instruction

The approach chosen for the optical processor is, on the other hand, diametrically opposed: an architecture with a single instruction, SUBLEQ, is proposed. This has four parameters (A, B, C, and D for simplicity’s sake) and works in a fairly simple manner: it calculates A – B, puts the result in C, and jumps to location D if C is less than or equal to zero.
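To make the mechanics concrete, here is a minimal Python interpreter for the four-operand variant as described above (the classic SUBLEQ has three operands). The word-addressed memory, the signed 16-bit wraparound and the halting rule (execution stops when the program counter leaves the code region) are my own assumptions, not details taken from the paper.

```python
def to_i16(x: int) -> int:
    """Wrap an integer to a signed 16-bit value (two's complement)."""
    return ((x + 0x8000) & 0xFFFF) - 0x8000


def run_subleq4(mem: list[int], code_size: int, pc: int = 0,
                max_steps: int = 1_000_000) -> int:
    """Run a four-operand SUBLEQ program and return how many instructions executed.

    Each instruction is four consecutive words A, B, C, D:
        mem[C] = mem[A] - mem[B]; if mem[C] <= 0, jump to D, else fall through.
    Assumption: execution halts when pc leaves the code region [0, code_size).
    """
    steps = 0
    while 0 <= pc < code_size:
        if steps >= max_steps:
            raise RuntimeError("step limit reached")
        a, b, c, d = mem[pc:pc + 4]
        mem[c] = to_i16(mem[a] - mem[b])
        pc = d if mem[c] <= 0 else pc + 4
        steps += 1
    return steps


# ADD in two instructions: T = Z - B (i.e. -B), then C = A - T (i.e. A + B).
# Both jump targets equal the next instruction, so the branch outcome is irrelevant.
Z, A, B, T, C = 8, 9, 10, 11, 12          # hypothetical data addresses after the code
mem = [Z, B, T, 4,                         # instruction 0 at address 0
       A, T, C, 8,                         # instruction 1 at address 4
       0, 1000, 234, 0, 0]                 # data: Z=0, A=1000, B=234, T, C
steps = run_subleq4(mem, code_size=8)
print(mem[C], steps)                       # 1234 2
```

Even an addition, the friendliest case, already costs two instructions; anything that cannot be expressed as a short chain of subtractions costs far more.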

Now, with such an instruction it is clearly possible, formally, to perform any kind of calculation (it is a Turing-complete system), as the list of instructions that can be directly emulated (Table II of the publication) also shows, but at a very high price in terms of execution speed.

This is because more complex instructions require several SUBLEQs to achieve the same result. The paper offers a sort of comparison with the base instruction set of RISC-V (RV32I):

This makes SUBLEQ one of the simplest instruction set architectures (ISA) to implement, while retaining the full ability to run, for example, RISC-V RV32I instructions with a simple translation layer[50].

but if you check the publication it cites (reference [50]), on page 6, the situation turns out to be far worse than this artful embellishment suggests. Slightly more than half of the 37 RV32I instructions have relatively simple equivalents (requiring 4 to 16 SUBLEQs, both in the code and in execution), but all the others need many more instructions and, above all, must run internal loops to emulate their behaviour. This literally explodes the number of instructions actually executed and, consequently, leads to a huge performance hit (the numbers are rather telling).
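Why do shifts, and right shifts in particular, force loops? With only subtraction and a sign test, a left shift is cheap (x + x), but a right shift has to rebuild the result bit by bit. The function below is a Python model of that technique, not actual SUBLEQ code and not the translation from [50]: it halves an unsigned 16-bit value using only comparisons, subtractions and additions against a precomputed table of powers of two, and counts the loop iterations.

```python
# Precomputed constants: a subtraction-only program would keep such a table in memory,
# since it has no shift instruction to generate powers of two on the fly.
POW2 = [1 << i for i in range(16)]


def shr1_by_subtraction(x: int) -> tuple[int, int]:
    """Logical right shift by one of an unsigned 16-bit value.

    Uses only compare, subtract and add (a model of a subtraction-only machine).
    Returns (result, loop_iterations).
    """
    if not 0 <= x <= 0xFFFF:
        raise ValueError("expected an unsigned 16-bit value")
    result = 0
    iterations = 0
    for bit in range(15, 0, -1):      # bit 0 is simply discarded by the shift
        iterations += 1
        if x >= POW2[bit]:            # is this bit set? (higher bits already cleared)
            x -= POW2[bit]            # clear it
            result += POW2[bit - 1]   # and set the bit one position lower
    return result, iterations


print(shr1_by_subtraction(0xABCD))    # (21990, 15), i.e. 0xABCD >> 1 == 0x55E6
```

Every one of those 15 iterations would itself expand into several SUBLEQs, and a shift by more positions multiplies the work, where a conventional CPU needs a single instruction.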

The article often talks about being able to run Windows and several times refers to Doom (“does Doom run on it?”), but is silent (with good reason, at this point) on the speed at which they could run…

In addition to instructions, bits are reduced: only 16 at a time

The thoughtful slimming treatment also extends to the number of bits that can be manipulated at a time. Here the publication does not go quite as far, but its choice is reminiscent of the heyday of the computer pioneers of the first half of the 1980s.

In fact, after a long digression on how nice it is to deal with just a few bits (even harking back to the glorious 1970s), complete with suggestive advice to avoid floating-point numbers altogether and rely on good old 4- or 8-bit integers, citing the recent trend in AI computation (I’m simplifying and romanticising, but the point should be clear), the proposed solution for optical processors is finally blurted out:

For an all-optical processor using the current generation of PIC technologies, a 16-bit wide arithmetic unit strikes a good balance between performance and gate count.

In my humble opinion, this shows that it is not so much a desire to bring the motto “less is more” to computation proper, but rather a case of making a virtue of necessity, as the saying goes, given the obvious limitations of current optical technology.

After all, one can hardly criticise electronics for churning out chips with billions of transistors (packed into a few cm²), only to then propose solutions using a few hundred optical “gates” in order to save on the complexity of arithmetic operations (which really come down to just one: subtraction).

The authors justify this choice by claiming that 16 bits would suffice in most cases:

16-bit integers are wide enough, that the majority of the numbers that are currently represented by 32-bits or 64-bits in software experience a minor performance hit, as they rarely exceed a value of 65,535 (examples include most counters in loops, array indices, Boolean values or characters). Should the need arise to represent a 32-bit or 64-bit value, the performance loss is on a manageable order of magnitude for individual arithmetic operations.

This claim, however, is not backed by any source (although the paper is full of them elsewhere), so we do not know where these numbers come from. They might be partly defensible if restricted to the immediate values used in instructions (there are several studies on the subject, including on the offsets of instructions that reference memory), but they certainly cannot apply to the values manipulated by processors.

Counterexamples can be found galore, in the most disparate fields: from databases (ids for keys?) to text editors (ever had to deal with a file of more than 65,536 lines?), to video game scenes and assets, and one could go on and on.

The suggestion, in these cases, is to concatenate 16-bit values to cover the need, but the costs are anything but manageable on an architecture that has only one instruction to do everything (whereas current architectures provide flags and/or dedicated instructions precisely to handle these cases better). This is confirmed, moreover, by the above-mentioned study on using SUBLEQ to emulate the few instructions of RISC-V’s base ISA.
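As a minimal sketch of what “concatenating 16-bit values” involves, here is a 32-bit addition performed on two 16-bit halves. Without a carry flag, the carry has to be recovered with an extra comparison (the low sum wrapped around if it is smaller than one of the addends); on a SUBLEQ machine that comparison would itself turn into several instructions. The function name and the (high, low) representation are illustrative only.

```python
MASK16 = 0xFFFF


def add32_via_16(a_hi: int, a_lo: int, b_hi: int, b_lo: int) -> tuple[int, int]:
    """Add two 32-bit values, each given as (high, low) unsigned 16-bit halves."""
    lo = (a_lo + b_lo) & MASK16
    carry = 1 if lo < a_lo else 0        # no carry flag: detect the wraparound by comparison
    hi = (a_hi + b_hi + carry) & MASK16
    return hi, lo


# A line counter crossing the 16-bit limit: 65,535 + 1 wraps to 0 in a single word...
print((0xFFFF + 1) & MASK16)                           # 0
# ...and is only correct once split across two words: 0x0000FFFF + 1 == 0x00010000
print(add32_via_16(0x0000, 0xFFFF, 0x0000, 0x0001))    # (1, 0)
```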

In this regard, it is worth pointing out that Doom (“Doom runs on it”) would certainly not have an easy life on such an architecture, considering that it marked a clear break with the games of the past (for which 16 bits might even have sufficed) by explicitly requiring 32 bits.

Nor could it have been otherwise, since scene coordinates are represented as 16.16 fixed-point numbers (16 integer bits plus 16 fractional bits). This, however, clashes heavily with how they must be manipulated on such a machine, as mentioned above: shift operations, especially right shifts, are a disaster in terms of the instructions required to emulate them and, above all, the instructions actually executed.
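For reference, Doom’s fixed-point type (fixed_t in the released source, with FRACBITS = 16 and FRACUNIT = 1 << FRACBITS) keeps 16 integer and 16 fractional bits in a 32-bit integer, and the C version of its FixedMul computes a 64-bit product and then shifts it right by FRACBITS. The Python below is my own transcription of that arithmetic, plus the split into the two 16-bit words a 16-bit machine would have to juggle for every such value.

```python
FRACBITS = 16
FRACUNIT = 1 << FRACBITS      # 1.0 in 16.16 fixed point


def to_fixed(x: float) -> int:
    return round(x * FRACUNIT)


def fixed_mul(a: int, b: int) -> int:
    """16.16 multiply: full-width product, then shift right by FRACBITS.
    Python's >> on negative ints is an arithmetic shift (rounds toward minus infinity)."""
    return (a * b) >> FRACBITS


def split_words(v: int) -> tuple[int, int]:
    """The two 16-bit words a 16-bit machine would store for one 32-bit fixed_t."""
    v &= 0xFFFFFFFF           # two's-complement view of the 32-bit value
    return v >> 16, v & 0xFFFF


p = fixed_mul(to_fixed(1.5), to_fixed(2.25))
print(p / FRACUNIT, split_words(p))   # 3.375 (3, 24576)
```

Note that the right shift at the end of every multiplication is precisely the kind of operation a subtraction-only machine has to emulate with loops.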

On top of that, unlike the games (and applications) of the home computers of the late 1970s and first half of the 1980s, far more than 64kB of memory was needed (Doom required as much as 4MB as a basic requirement).

From this point of view, it is not at all clear how the new optical system could address more than that using only 16 bits. Bank switching was in vogue in the mid-1980s, but exploiting memory in this way was complicated and also had a performance impact.
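A minimal sketch of bank switching, assuming a hypothetical layout in which the top 16 KB of the 16-bit address space is a window onto a larger physical memory, selected by a bank register. Every access outside the currently selected bank first requires reprogramming that register, which is where both the complexity and the performance cost come from.

```python
WINDOW_BASE = 0xC000   # hypothetical: addresses 0xC000-0xFFFF form the banked window
WINDOW_SIZE = 0x4000   # 16 KB per bank


class BankedMemory:
    """16-bit addresses mapped onto a larger physical memory via a bank register."""

    def __init__(self, physical_size: int):
        self.physical = bytearray(physical_size)
        self.bank = 0                      # selects which 16 KB slice the window shows

    def translate(self, addr16: int) -> int:
        if not 0 <= addr16 <= 0xFFFF:
            raise ValueError("not a 16-bit address")
        if addr16 < WINDOW_BASE:           # fixed, unbanked region
            return addr16
        phys = WINDOW_BASE + self.bank * WINDOW_SIZE + (addr16 - WINDOW_BASE)
        if phys >= len(self.physical):
            raise IndexError("bank beyond physical memory")
        return phys

    def read(self, addr16: int) -> int:
        return self.physical[self.translate(addr16)]

    def write(self, addr16: int, value: int) -> None:
        self.physical[self.translate(addr16)] = value


mem = BankedMemory(4 * 1024 * 1024)        # 4 MB, Doom's baseline requirement
mem.bank = 10                              # extra work before touching another bank
mem.write(0xC123, 42)
print(hex(mem.translate(0xC123)))          # 0x34123
```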

Still on the subject of memory, the authors of the publication are perhaps unaware that pointers wider than 16 bits allow some of their bits to be used to store useful information, using the pointer tagging technique. A masterful example of this is Apple’s implementation in iOS 7, made possible by its customised ARM64 processors.
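The idea can be sketched in a few lines, assuming a 64-bit pointer whose top byte is not used for addressing (as with the Top Byte Ignore feature of ARMv8). This is a generic illustration, not Apple’s actual encoding. With 16-bit pointers there is simply no room for it: every bit is needed to address 64K locations.

```python
TAG_SHIFT = 56                      # assumption: the tag lives in the top byte
ADDR_MASK = (1 << TAG_SHIFT) - 1


def tag_pointer(addr: int, tag: int) -> int:
    """Pack an 8-bit tag into the unused top byte of a 64-bit pointer."""
    if not 0 <= addr <= ADDR_MASK:
        raise ValueError("address overlaps the tag bits")
    if not 0 <= tag <= 0xFF:
        raise ValueError("tag must fit in 8 bits")
    return (tag << TAG_SHIFT) | addr


def untag_pointer(ptr: int) -> tuple[int, int]:
    """Return (address to dereference, tag)."""
    return ptr & ADDR_MASK, ptr >> TAG_SHIFT


p = tag_pointer(0x0000_7FFF_1234_5678, 0x2A)
addr, tag = untag_pointer(p)
print(hex(p), hex(addr), tag)       # 0x2a007fff12345678 0x7fff12345678 42
```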

But the instruction opcodes are expanded

The choice of the standard/natural data size, combined with how the single available instruction works, determines the format of the only opcode the machine has to handle. It will inevitably be a bit “chubby” (taking up a lot of space), but extremely easy to decode:

As important as improving arithmetic throughput is, the most important aspect about our choice of 16-bit width is, that it is sufficient to enable fixed width instructions. This is vital to reduce the complexity, as it reduces one of the more problematic parts to implement on a processor, the instruction decoder, to a mere look-up table.

It is very easy to work out how this opcode is structured. SUBLEQ has four operands, each able to reference any location in the 16-bit address space, so each instruction occupies 4 × 16 = 64 bits = 8 bytes.
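To illustrate the trade-off, here is a sketch of how such a fixed 64-bit instruction word could be packed and unpacked with Python’s struct module. The field order (A, B, C, D) and the little-endian byte order are assumptions; the point is that “decoding” amounts to splitting four fixed fields, at the cost of 8 bytes per instruction.

```python
import struct

SUBLEQ_FORMAT = "<4H"   # assumption: four little-endian unsigned 16-bit fields


def encode_subleq(a: int, b: int, c: int, d: int) -> bytes:
    """Pack one SUBLEQ instruction; struct raises struct.error if a field exceeds 16 bits."""
    return struct.pack(SUBLEQ_FORMAT, a, b, c, d)


def decode_subleq(word: bytes) -> tuple[int, int, int, int]:
    """Decoding is nothing more than splitting the word into its four fields."""
    return struct.unpack(SUBLEQ_FORMAT, word)


insn = encode_subleq(0x0100, 0x0102, 0x0104, 0x0010)
print(len(insn), decode_subleq(insn))   # 8 (256, 258, 260, 16)
```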

This is a remarkably high value compared with other architectures (whose average instruction length is around 3-4 bytes, with a few exceptions of only 2 bytes), partly offset by the considerable flexibility of being able to reference three memory operands at once.

Unfortunately, this is not enough to solve the problems caused by the extreme simplicity of a single instruction, which forces programmers to write several instructions for tasks that other architectures handle with far fewer, greatly increasing the size of applications.

Needless to say, code density (a very important parameter in evaluating the quality of an architecture) will suffer greatly, as will the maximum size of programs that can run on such a processor (both code and data will have to fit in 64kB, the stuff of 1970s 8-bit processors!).
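A back-of-the-envelope calculation gives an idea of the penalty, assuming the four-operand variant described above and a memory word that always holds zero: even the simplest case, an addition, needs two SUBLEQs (first T = 0 − b, then c = a − T), against a single 4-byte RV32I add (2 bytes with the compressed C extension).

```latex
\underbrace{2}_{\text{SUBLEQs}} \times \underbrace{8\ \text{bytes}}_{4 \times 16\ \text{bits}} = 16\ \text{bytes}
\qquad\text{vs.}\qquad
\underbrace{1}_{\texttt{add}} \times 4\ \text{bytes} = 4\ \text{bytes}
\quad\Longrightarrow\quad \frac{16}{4} = 4\times
```

And this is the best case: instructions that must be emulated with loops fare far worse.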

And “generality” is reduced: new memory model

Memory management and memory type add to the list of limitations of optical computing. The model being strongly pushed is read-only memory, which aligns perfectly with the optical model.

The considerations made are certainly valid, because we know that code is generally immutable (let’s forget for the moment the old practices regarding self-modifying code), as are most assets/data, while a small part requires changes during execution.

Two problems arise, however, with such a model. The first is that, although they do not change at runtime, the code and most of the data are still generated and must be stored somewhere and somehow.

The solution to this problem is a return to WORM (Write Once Read Many) technology: write once to the storage medium (which can be done very quickly) and read as many times as you want (at full speed).

The second problem is that you certainly cannot throw away WORM media every time code and/or data are updated (remember that code is generally immutable only during its execution; ditto for assets).

The solution is to use RWORM (Resettable WORM) technology, which allows the media to be cleaned up so that it is ready to be reused for a (single) write, but taking a couple of orders of magnitude (100 times) longer than a normal write.

To mitigate this problem, the adoption of a COW (Copy-on-write) mechanism is proposed, which involves marking the locations (or, in general, blocks) of memory that have been modified, in order to proceed with their copying (with the modified parts applied later, in the COW models commonly employed).

Two possibilities are mentioned for its implementation: hardware assistance that applies the COW at the moment the modifications take place, or an entirely software solution that offloads onto compilers the responsibility of identifying the parts of the code that modify memory and applying the COW.
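The software variant can be pictured with a minimal Python sketch, under my own assumptions (a hypothetical 256-byte block size and a dictionary as the overlay): the base image is never written, and the first write to a block copies it into a private, writable overlay. Note that the overlay itself has to live in ordinary writable memory.

```python
BLOCK = 256  # hypothetical block size in bytes


class CowMemory:
    """Read-only (WORM-like) base image plus a copy-on-write overlay of modified blocks."""

    def __init__(self, image: bytes):
        self.base = bytes(image)                  # immutable backing store
        self.overlay: dict[int, bytearray] = {}   # block index -> private modified copy

    def read(self, addr: int) -> int:
        blk, off = divmod(addr, BLOCK)
        if blk in self.overlay:
            return self.overlay[blk][off]
        return self.base[addr]

    def write(self, addr: int, value: int) -> None:
        blk, off = divmod(addr, BLOCK)
        if blk not in self.overlay:               # first write: copy the whole block
            start = blk * BLOCK
            self.overlay[blk] = bytearray(self.base[start:start + BLOCK])
        self.overlay[blk][off] = value


mem = CowMemory(bytes(1024))
mem.write(300, 7)
print(mem.read(300), mem.base[300], len(mem.overlay))   # 7 0 1
```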

The suggestion is to adopt the latter (the former seems too complicated/onerous to implement), but this would also change the programming paradigm. And we have already seen what happens when too much is delegated to compilers (see Intel and HP with Itanium).

Forcing one programming paradigm (functional)

Needless to say, the paradigm proposed is the functional one, whose languages are naturally geared towards managing memory in terms of immutability.

The possibility of using languages such as C++ and Python in (purely) functional terms is also mentioned, but they are certainly not suited to the purpose, even though they provide syntactic constructs and/or libraries for it.

In short, writing code this way in these languages is neither natural nor convenient: it means tying one’s hands and using only their functional parts. At that point, the obvious choice would be one of the languages that are functional by nature.
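Python illustrates the point well. The closest it gets to enforced immutability is something like a frozen dataclass, where updates produce new values through dataclasses.replace; yet the guarantee is only a convention, since object.__setattr__ bypasses it. The Player type is purely illustrative.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Player:
    x: int
    y: int
    health: int


def take_damage(p: Player, amount: int) -> Player:
    """Functional style: return a new Player instead of mutating the old one."""
    return replace(p, health=p.health - amount)


before = Player(x=10, y=20, health=100)
after = take_damage(before, 30)
print(before.health, after.health)        # 100 70

# Immutability is not airtight: this bypasses the frozen check and mutates in place.
object.__setattr__(before, "health", 0)
print(before.health)                      # 0
```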

In any case, there would remain scenarios in which it is necessary to write to memory (e.g. for I/O, which is also mentioned in the paper), so eventually even the optical processor will need some conventional (volatile) memory for this purpose (the absence of which could, among other things, negatively impact performance).

In the end, such a device will in any case need three types of memory: ROM for absolutely immutable information, RWORM to store most of the information (code and data/assets), and a smaller “volatile” part for the conventional locations whose values change too often.

No small complication, which programmers and compilers will have to deal with: certainly not the best for a system that aims to be “general”.

Conclusions

The publication continues with other considerations on the use of optics to implement arithmetic operations more efficiently, or on implementation details, (heavy) modifications to the software stack, etc., on which one could go on and on, but I prefer to close the analysis here because I think that what I have already seen is more than sufficient to understand all the limitations of these optical processors.

Beyond this, the authors repeatedly dwell on feasibility when discussing certain choices and limitations that would make anyone turn up their nose. The point is that, if a device like this is Turing-complete, it can without doubt, in principle, perform any kind of calculation.

This, however, certainly does not make it “general-purpose” as we normally understand the term, which means being able to perform the tasks currently given to electronic devices, and to complete them better (faster and with lower energy consumption).

In short, the theory remains an end in itself, even though prototypes are presented and their characteristics outlined.

Practice is what counts: does Doom (as repeatedly mentioned) run on it? Then I want to see it run better (faster) than on current systems while consuming less energy. Otherwise we remain in the realm of chatter (as with the phantom quantum computers that are supposed to replace today’s ones).