We are drawing to a close and I would like to take this opportunity to list the articles in this series:

APX: Intel’s new architecture – 1 – Introduction

APX: Intel’s new architecture – 2 – Innovations

APX: Intel’s new architecture – 3 – New instructions

APX: Intel’s new architecture – 4 – Advantages & flaws

APX: Intel’s new architecture – 5 – Code density

APX: Intel’s new architecture – 6 – Implementation costs

APX: Intel’s new architecture – 7 – Possible improvements

RISCs reached (?)

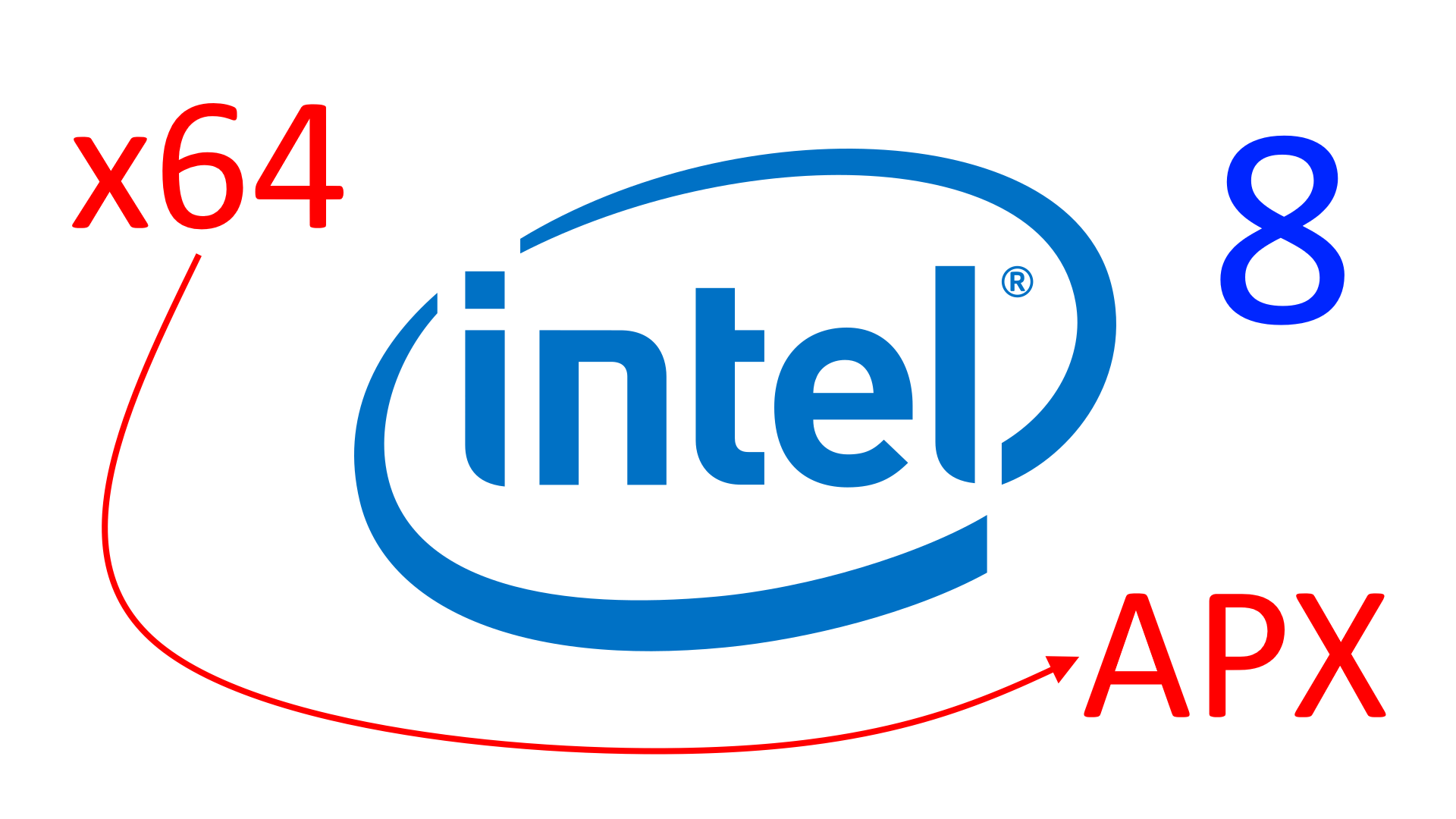

As I anticipated at the beginning of the first article, the change that APX brings is epochal and, in my humble opinion, certainly comparable to AMD’s introduction of x64 as a new architecture that extended the much more famous x86. The innovations and changes are so numerous and so pervasive that we are faced with a genuinely new architecture, although Intel has presented it only as an extension (taking care to integrate it perfectly into the existing x64 ecosystem, in a completely backward-compatible manner).

I do not think I am exaggerating when I say that CISCs, of which x86 and x64 are the best-known members, have long been the object of ridicule and mockery for the small number of registers they have generally made available in comparison with the more renowned and acclaimed RISCs.

In reality, these criticisms are unfounded, since no definition of CISC states that it must have few registers, nor does any definition of RISC state that it must have many. This is merely an observation that the chips labelled as such happen to have these characteristics; one cannot conclude from it that the situation is, and must always be, exactly the same for every member of these two macro-families.

I don’t want to dwell on the subject, as I will reserve my analysis and reflections for the next series on the age-old RISC vs. CISC dispute, which will set the record straight from both a technical and a historical point of view (the context is and remains very important), with the intention of laying the issue to rest (assuming the facts still have any value).

Returning to APX, Intel ‘closes the circle’, as well as the gap that separated it from other processor manufacturers, by extending the general-purpose registers from 16 to 32. More registers are undoubtedly a convenience, as they reduce reads from and writes to memory, although a CISC such as x86/x64 has much less need of them than a RISC: many of its instructions can access memory directly, while others can load or use immediate values, even of considerable size (without, therefore, having to use special load instructions and ‘dirty’ other registers for the purpose).

What matters, in any case, is that with these 16 additional registers another taboo falls, although there is a price to pay. It is a price that stems mainly from the primitive design of the processor (the 8086) and that is paid in instruction length, as already happened in going from x86 to x64: about 0.8 bytes more, on average, per instruction.

Intel stated, however, in the presentation of this new extension/architecture, that the results would be similar to those of x64 (in a preliminary simulation using the well-known SPEC2017 benchmark). This sounds decidedly strange in light of the analysis of prefix usage in several common scenarios that I provided in a previous article. The doubt will be resolved only when new processors integrating APX, and binaries that make use of it, become available.

Ternary instructions

Another feature for which x86/x64 has long been criticized is the absence of ternary instructions (two sources from which to draw the data for the operation, plus a destination in which to store the result). This deficiency was partly mitigated when new instructions exploiting the VEX prefix were introduced (mostly SIMD instructions), but it remained in the most common general-purpose instructions (ADD, SUB, AND, etc.).

The same applied to the unary instructions (NOT, NEG, INC, etc.), which had to use the same operand to specify both the source of the data to be processed and the destination of the result.

Intel has remedied both of these chronic shortcomings by allowing existing instructions to be extended with a further argument, the destination register (not all of them, unfortunately: only a privileged few can use this new mechanism). And it has gone even further, allowing either of the two sources to be an operand in memory.
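To make the difference concrete, here is a minimal sketch in Python (a toy, not real compiler code) of how an operation such as `d = a OP b` might be lowered in the two styles. The function names and register choices are hypothetical, and the three-operand assembly text is shown only for illustration, not as the exact syntax of any assembler.

```python
# Toy "code generator": lowers d = a OP b into assembly-like strings.
# All names here are hypothetical and exist only for illustration.

def lower_two_operand(op, dst, src1, src2):
    """Classic x86/x64 style: the destination is also the first source."""
    if dst == src1:
        # The result overwrites the first source: one instruction is enough.
        return [f"{op} {dst}, {src2}"]
    if dst == src2:
        # Copying src1 into dst would destroy src2; a real compiler would
        # need a temporary register here. The sketch simply refuses.
        raise ValueError("sketch assumes dst differs from src2")
    # Otherwise an extra copy is needed to preserve the first source.
    return [f"mov {dst}, {src1}", f"{op} {dst}, {src2}"]

def lower_three_operand(op, dst, src1, src2):
    """Ternary style: a separate destination, both sources left intact."""
    return [f"{op} {dst}, {src1}, {src2}"]

two = lower_two_operand("add", "rax", "rbx", "rcx")
three = lower_three_operand("add", "rax", "rbx", "rcx")
print(two)    # the copy plus the operation
print(three)  # a single instruction
```

In this toy model the ternary form saves the extra copy whenever the destination differs from both sources, which is the kind of ‘more useful work’ per instruction discussed here.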

A clear improvement that makes the architecture not only more flexible, but also faster (the instructions that benefit from these innovations can perform ‘more useful work’), as preliminary benchmarks seem to show.

And it bears repeating: this is a feature that has been missing for some time, and which can now finally be utilized and appreciated.

The weight of the legacy of 8086 (and successors)

As I have already stated when discussing the advantages and flaws, these two are the most significant innovations (the others, in my opinion, are of lesser importance and scope), but they leave a bitter taste in the mouth because of the approach used: extending the existing x64 architecture with the umpteenth ‘patch’ (the new REX2 prefix, combined with the changes made to the existing EVEX), so that with APX it becomes even more complicated.

The 8086 was the progenitor of this very famous family of processors, but when it was born (a good 45 years ago) no one could have imagined what its history would be, nor guessed the enormous impact that microprocessors would have on technology and on the development of our society. Therefore, when faced with competition, Intel had to rework the design, extending it in some way, but always with the objective of preserving compatibility with existing software (which has also been its trademark).

These extensions came, in fact, in the form of ‘patches’: looking for ‘holes’ (unused bytes) in the opcode table and exploiting them to add new instructions or prefixes, depending on what was needed. This is how the 80286 and 80386 came into being in the first place (they were the processors that brought the most radical changes to the ISA).
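The same hole-hunting approach carried over to x64. As an illustration, the sketch below decodes the REX prefix, which in 64-bit mode took over the byte values 0x40 to 0x4F (on x86 these encode the one-byte INC/DEC register instructions). It is a minimal Python sketch for illustration only: the `Rex` type and the `decode_rex` function are hypothetical names, and a real decoder must also consider the processor mode and the position of the byte within the instruction.

```python
from typing import NamedTuple, Optional

class Rex(NamedTuple):
    """Fields of a REX prefix byte, laid out as 0100WRXB (hypothetical type)."""
    w: bool  # 64-bit operand size
    r: bool  # extends the ModRM.reg field
    x: bool  # extends the SIB.index field
    b: bool  # extends ModRM.rm, SIB.base or the opcode register field

def decode_rex(byte: int) -> Optional[Rex]:
    """Return the REX fields if the byte lies in 0x40..0x4F, else None.

    Assumption: the caller already knows the CPU is in 64-bit mode and
    that this byte sits where a REX prefix is allowed.
    """
    if byte & 0xF0 != 0x40:
        return None
    return Rex(
        w=bool(byte & 0b1000),
        r=bool(byte & 0b0100),
        x=bool(byte & 0b0010),
        b=bool(byte & 0b0001),
    )

print(decode_rex(0x48))  # REX with only W set
print(decode_rex(0x90))  # not a REX prefix
```

The point of the sketch is that a single reclaimed row of the opcode table provides only four payload bits, which is why each further extension has needed yet another prefix.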

Unfortunately, this is a common mentality that I detect in engineers who work on the design of processor architectures, and in particular when it comes to extending an existing ISA: exploiting some hole, left open somewhere, in order to implement a certain new functionality that has become necessary.

Unfortunately, there are not many signs of creative thinking aimed at defining original designs, with innovative and, perhaps, ‘elegant’ solutions to certain problems. Maybe it is also a matter of time (the infamous time to market), but designing a processor is hardly something that gets completed in a few weeks.

This is also demonstrated by the last article in which I proposed changes to improve the implementation of APX: a job that took just a few days to complete, but which brings considerable benefits. In short, it didn’t take that long to come up with something that wasn’t the classic patch by quickly exploiting some hole found somewhere, with as little effort as possible…

Competition choices

It is certainly true that with a blank sheet of paper in front of you, and no (strong) prior constraints on what to ‘draw’, there would be much more room for the imagination to come up with more ‘beautiful’ (as well as more efficient and/or better-performing) designs.

An example of this is RISC-V, which started out as a university summer project and has since grown on its own to the considerable diffusion and support that we know today, with an unstoppable success that will continue in the future (mainly because there are no licenses to pay).

But RISC-V is a ‘greenfield project’, as we say in the jargon: brand new, free of any constraints (apart from those self-imposed by its architects), and it was very easy for it to avoid certain mistakes, because its designers had learnt from those made by others and from the history of other architectures.

x86, on the other hand, is a very old design, with an enormous base of support and software behind it that it has to account for. On paper, therefore, it could not afford anything of the sort: it is subject to very heavy constraints, on pain of possible failure (the example of Itanium AKA IA-64, also by Intel, should be well known).

Exactly the same could be said of ARM and its equally renowned 32-bit architecture. ARM, however, had the courage to rework the design and rebuild it on new foundations when it decided to bring out its own 64-bit extension, AArch64 AKA ARM64, which is not compatible with the previous 32-bit ISA (although the two have much in common, and porting applications does not require a total rewrite).

It took a big risk, no doubt. A risk which, however, paid off by opening the way to several new markets, so much so that the company now focuses only on this new architecture (no further developments have been seen for its 32-bit ISA, in any form).

The 64-bit evolutions of x86

Unfortunately, AMD did not have the same fortitude when it decided to give a 64-bit heir to the 80386, and its engineers preferred the easy route of patching it up just enough to get the job done, ending up, now, with x64. Ditto with Intel which, unfortunately, continues to do the same with APX.

These are painful choices, since this continual addition of patches to the architecture only increases its complexity, the difficulties in its realization, the implementation costs (including the testing and debugging of the circuits), and the related repercussions in the application field.

On the one hand, the ISA catches up with the competition (with x64 it really did so only a little), offering similar (or even more advanced) features and improving performance; on the other hand, it is forced to drag all this legacy around, which ultimately makes it less competitive (the others have much less to carry around in each core).

This might have been acceptable and made sense when x86 and x64 represented THE market and dominated everything from desktops to laptops, workstations to servers, and even supercomputers dedicated to HPC. But the era of smartphones and tablets has proved incontrovertibly that compatibility at all costs with these two architectures is not strictly necessary and, indeed, that it is sometimes even a burden, and recently the change has also come to laptops, then to servers, and finally to HPC systems, all of which have embraced different architectures.

Still clinging to binary compatibility could, in the end, turn into a deadly embrace for Intel if no one is willing to pay what is called the ‘x86 tax’ without getting substantial benefits in return (benefits that the competition does not offer). AMD, for its part, has secured a future, at least for now, thanks to the console market (which, however, has historically seen different architectures succeed one another).

Blindly continuing in this direction will only increasingly relegate Intel to a niche market, reversing the role it has played so far. In short, the company should start asking itself what the real added value of its architecture is compared to others, and why it should be preferred over others.

At this point, it should be pointed out that innovations such as x64 first and APX later forced at least binary compatibility to be abandoned. In fact, x64 is only partially compatible with x86 at binary and source level, so that trivial recompilation of applications was not always possible, but required porting/adaptations.

Formally, this is not true for APX (although, in practice, this is what will happen for it too, as explained above). Intel itself emphasized in the announcement that a simple recompilation is sufficient to reap the benefits. But it is also true that recompiling a project for APX inevitably produces executables that are incompatible with processors implementing only the older x86/x64 architectures.

One could, therefore, have thought of introducing a new architecture, similarly to what ARM did with AArch64, definitively cutting ties with all the legacy (or, at least, most of it), while still offering a platform where porting the code would cost very little and would certainly not require a complete rewrite.

A recent, but very timid, signal in this direction has come from Intel itself with X86-S (which I talked about in a recent article, in Italian). It is not a new architecture, but a version of x64 with a lot of legacy stuff removed: stuff that is not really used by anyone anymore and that only serves to make the core heavier and to increase power consumption (not all unused transistors can be turned off, even if Intel and AMD have done a great job in trying to minimize consumption).

It’s the classic ‘I wish I could, but I can’t’: there is an awareness of carrying around too much obsolete stuff that clips the wings of their products, but the company never finds the courage to make a clean break with the past, presenting something truly innovative and not viscerally tied to the umbilical cord of the legacy (however successful it may have been).

And then APX… and other proposals

This brings us, then, to APX, which is the child of this mentality/modus operandi still too heavily tied to the past and from which it seems impossible to escape. Yes, the innovations are all there, they are great as well as long overdue, but their structure & implementation are, as already widely discussed, another patch on a project that is now too obsolete (and this even using the improvements I suggested in the previous article).

A certainly more far-sighted strategy would have been to imitate ARM with AArch64 and thus design a new architecture with the desired innovations, but completely removing all the legacy accumulated so far.

Similarly, this would have been something more long-term, since the idea is to introduce the new ISA while supporting the old one (x86 and x64, specifically), so as to favour its diffusion, and with the final objective of giving the old architectures the boot once a certain critical mass has been reached in terms of available software and support, similar to what happened with x64 (which has now almost completely replaced x86: the most important and widespread OSes are no longer developed for the latter).

Obviously, this would entail a higher cost to be paid initially, since implementing a new architecture is not the same thing as adding a patch, even one as complex as that required to add APX to x64. Of course, it also depends on how the new architecture is designed, as it could also be simple enough to be cheap compared to the APX patch.

A further advantage with this approach would be to be able to realize the same thing for 32-bit code, i.e. a new 32-bit architecture. This is because currently the only one available in the world of Intel (but obviously the same applies to AMD) is the old x86, for which it is impossible to add the benefits of APX (at least as they are conceived), let alone use extensions such as AVX-512.

It might seem anachronistic to think of introducing a new 32-bit architecture when the whole world is moving towards 64 bits, but a good 32-bit ISA has intrinsic advantages: pointers occupy 32 bits instead of 64, which reduces the size of data structures in memory and, consequently, the pressure on the data cache (and, in general, on the entire memory hierarchy).
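A rough way to see the effect of pointer size on data structures is to compute the layout of a pointer-heavy node by hand. The following Python sketch assumes a hypothetical binary-tree node (one 32-bit key plus two child pointers) and a simplified layout rule in which every field is aligned to its own size, as common C ABIs do for these basic types; the helper names are invented for this example.

```python
def align_up(offset, alignment):
    """Round offset up to the next multiple of alignment."""
    return (offset + alignment - 1) // alignment * alignment

def struct_size(field_sizes):
    """Size of a C-like struct, assuming each field is aligned to its own
    size and the struct is padded to its largest field alignment."""
    offset = 0
    max_align = 1
    for size in field_sizes:
        offset = align_up(offset, size) + size
        max_align = max(max_align, size)
    return align_up(offset, max_align)

def tree_node_size(pointer_size):
    """Hypothetical node: a 4-byte key followed by left and right pointers."""
    return struct_size([4, pointer_size, pointer_size])

print(tree_node_size(4))  # node size with 32-bit pointers
print(tree_node_size(8))  # node size with 64-bit pointers
```

Under these assumptions the node takes 12 bytes with 32-bit pointers and 24 bytes with 64-bit ones (the latter includes 4 bytes of padding after the key), so about twice as many nodes fit in the same amount of cache.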

Moreover, it is not common for an application to use more than 4 GB of (virtual) memory, so such applications could always be compiled to 32-bit in order to exploit the aforementioned advantages. It could be argued that modern video games are now all 64-bit because they require more than 4 GB of RAM, but in reality the vast majority of that space is occupied by assets (especially textures), so it is conceivable to keep using 32-bit for everything that only the CPU has to process.

Finally, nothing prevents a 64-bit OS from running 32-bit applications alongside 64-bit ones in a completely transparent manner, as is already the case with x64, thus making it possible to exploit the advantages of both.

Obviously, the two new 32-bit and 64-bit architectures should be designed to be extremely similar and with very few differences, so as to minimize implementation costs. All this always with a view to the future of getting both x86 and x64 completely out of the way and, with them, the considerable cost (several million transistors for the legacy part alone, for each individual core).

In fact, it should be pointed out, if it was not yet clear, that the proposal would be to maintain support for x86 and x64 for compatibility with the entire existing software pool, and at the same time enable the two new architectures for the new executables that would make use of them. For the first few years, the four architectures should co-exist, but in the long term, the two older ones would disappear to make way exclusively for the new ones.

Furthermore, processors implementing only the new architectures could also be marketed immediately in sectors (servers and HPC, for instance) where binary compatibility with existing software is not strictly necessary. There, the advantages of smaller cores that consume less power could be fully exploited from day one, making these products immediately much more competitive.

In the end?

We have, therefore, come to the end. And in the end, all that remains are the dreams of something really new, as you will have guessed from what has been written (in particular, in the last part). Something that is certainly possible (bearing in mind the requirements of a possible evolution of x86/x64) and that I will discuss in a future series of articles.

As we have seen, thanks to APX Intel is catching up with the competition, it is true, but it remains to be seen for how long it will still be able to compete while dragging along such a heavy heritage (which, in any case, is already limiting the markets in which it can operate).

The next (mini)series will tackle, as already anticipated, the longest-lived and everlasting debate in the field of computer architectures: RISC vs. CISC. With the audacity to … put an end to it.